Upload 4 files

- app.py +35 -71

- red_car.png +0 -0

- requirements.txt +3 -2

- supercar.png +0 -0

app.py

CHANGED

|

@@ -10,7 +10,13 @@ from diffusers import StableDiffusionXLPipeline, EDMEulerScheduler, StableDiffus

|

|

| 10 |

from custom_pipeline import CosStableDiffusionXLInstructPix2PixPipeline

|

| 11 |

from huggingface_hub import hf_hub_download

|

| 12 |

from huggingface_hub import InferenceClient

|

| 13 |

|

| 14 |

|

| 15 |

help_text = """

|

| 16 |

To optimize image results:

|

|

@@ -37,47 +43,19 @@ def set_timesteps_patched(self, num_inference_steps: int, device = None):

|

|

| 37 |

|

| 38 |

# Image Editor

|

| 39 |

edit_file = hf_hub_download(repo_id="stabilityai/cosxl", filename="cosxl_edit.safetensors")

|

| 40 |

-

normal_file = hf_hub_download(repo_id="stabilityai/cosxl", filename="cosxl.safetensors")

|

| 41 |

-

|

| 42 |

EDMEulerScheduler.set_timesteps = set_timesteps_patched

|

| 43 |

-

|

| 44 |

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

|

| 45 |

-

|

| 46 |

pipe_edit = StableDiffusionXLInstructPix2PixPipeline.from_single_file(

|

| 47 |

edit_file, num_in_channels=8, is_cosxl_edit=True, vae=vae, torch_dtype=torch.float16,

|

| 48 |

)

|

| 49 |

pipe_edit.scheduler = EDMEulerScheduler(sigma_min=0.002, sigma_max=120.0, sigma_data=1.0, prediction_type="v_prediction")

|

| 50 |

pipe_edit.to("cuda")

|

| 51 |

|

| 52 |

-

from diffusers import StableDiffusionXLPipeline, EulerAncestralDiscreteScheduler

|

| 53 |

-

|

| 54 |

-

if not torch.cuda.is_available():

|

| 55 |

-

DESCRIPTION += "\n<p>Running on CPU 🥶 This demo may not work on CPU.</p>"

|

| 56 |

-

|

| 57 |

-

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

|

| 58 |

-

|

| 59 |

-

|

| 60 |

-

# Image Generator

|

| 61 |

-

if torch.cuda.is_available():

|

| 62 |

-

pipe = StableDiffusionXLPipeline.from_pretrained(

|

| 63 |

-

"fluently/Fluently-XL-v4",

|

| 64 |

-

torch_dtype=torch.float16,

|

| 65 |

-

use_safetensors=True,

|

| 66 |

-

)

|

| 67 |

-

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

|

| 68 |

-

pipe.load_lora_weights("ehristoforu/dalle-3-xl-v2", weight_name="dalle-3-xl-lora-v2.safetensors", adapter_name="dalle")

|

| 69 |

-

pipe.set_adapters("dalle")

|

| 70 |

-

|

| 71 |

-

def randomize_seed_fn(seed: int, randomize_seed: bool) -> int:

|

| 72 |

-

if randomize_seed:

|

| 73 |

-

seed = random.randint(0, 999999)

|

| 74 |

-

return seed

|

| 75 |

-

|

| 76 |

# Generator

|

| 77 |

@spaces.GPU(duration=30, queue=False)

|

| 78 |

-

def king(type

|

| 79 |

-

input_image

|

| 80 |

-

instruction: str

|

| 81 |

steps: int = 8,

|

| 82 |

randomize_seed: bool = False,

|

| 83 |

seed: int = 25,

|

|

@@ -85,12 +63,13 @@ def king(type = "Image Generation",

|

|

| 85 |

image_cfg_scale: float = 1.7,

|

| 86 |

width: int = 1024,

|

| 87 |

height: int = 1024,

|

| 88 |

-

guidance_scale: float = 6

|

| 89 |

use_resolution_binning: bool = True,

|

| 90 |

progress=gr.Progress(track_tqdm=True),

|

| 91 |

):

|

| 92 |

if type=="Image Editing" :

|

| 93 |

-

|

| 94 |

text_cfg_scale = text_cfg_scale

|

| 95 |

image_cfg_scale = image_cfg_scale

|

| 96 |

input_image = input_image

|

|

@@ -103,49 +82,34 @@ def king(type = "Image Generation",

|

|

| 103 |

num_inference_steps=steps, generator=generator).images[0]

|

| 104 |

return seed, output_image

|

| 105 |

else :

|

| 106 |

-

|

| 107 |

-

|

| 108 |

-

generator = torch.Generator().manual_seed(seed)

|

| 109 |

-

|

| 110 |

-

|

| 111 |

-

|

| 112 |

-

|

| 113 |

-

|

| 114 |

-

|

| 115 |

-

|

| 116 |

-

|

| 117 |

-

|

| 118 |

-

|

| 119 |

-

|

| 120 |

-

|

| 121 |

-

output_image = pipe(**options).images[0]

|

| 122 |

-

return seed, output_image

|

| 123 |

-

|

| 124 |

# Prompt classifier

|

| 125 |

-

def response(instruction, input_image=None):

|

| 126 |

if input_image is None:

|

| 127 |

output="Image Generation"

|

| 128 |

-

yield output

|

| 129 |

else:

|

| 130 |

-

|

| 131 |

-

|

| 132 |

-

|

| 133 |

-

|

| 134 |

-

|

| 135 |

-

|

| 136 |

-

system="[SYSTEM] You will be provided with text, and your task is to classify task is image generation or image editing answer with only task do not say anything else and stop as soon as possible. [TEXT]"

|

| 137 |

-

|

| 138 |

-

formatted_prompt = system + instruction + "[TASK]"

|

| 139 |

-

stream = client.text_generation(formatted_prompt, **generate_kwargs, stream=True, details=True, return_full_text=False)

|

| 140 |

-

output = ""

|

| 141 |

-

for response in stream:

|

| 142 |

-

if not response.token.text == "</s>":

|

| 143 |

-

output += response.token.text

|

| 144 |

-

if "editing" in output:

|

| 145 |

output = "Image Editing"

|

| 146 |

else:

|

| 147 |

output = "Image Generation"

|

| 148 |

-

yield output

|

| 149 |

return output

|

| 150 |

|

| 151 |

css = '''

|

|

@@ -160,7 +124,7 @@ examples=[

|

|

| 160 |

[

|

| 161 |

"Image Generation",

|

| 162 |

None,

|

| 163 |

-

"A

|

| 164 |

|

| 165 |

],

|

| 166 |

[

|

|

@@ -178,7 +142,7 @@ examples=[

|

|

| 178 |

[

|

| 179 |

"Image Generation",

|

| 180 |

None,

|

| 181 |

-

"

|

| 182 |

|

| 183 |

],

|

| 184 |

[

|

|

|

|

| 10 |

from custom_pipeline import CosStableDiffusionXLInstructPix2PixPipeline

|

| 11 |

from huggingface_hub import hf_hub_download

|

| 12 |

from huggingface_hub import InferenceClient

|

| 13 |

+

from diffusers import StableDiffusion3Pipeline, SD3Transformer2DModel, FlowMatchEulerDiscreteScheduler

|

| 14 |

|

| 15 |

+

device = "cuda" if torch.cuda.is_available() else "cpu"

|

| 16 |

+

dtype = torch.float16

|

| 17 |

+

|

| 18 |

+

repo = "stabilityai/stable-diffusion-3-medium-diffusers"

|

| 19 |

+

pipe = StableDiffusion3Pipeline.from_pretrained(repo, torch_dtype=torch.float16).to(device)

|
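The added lines choose `device` at runtime but pass `torch.float16` unconditionally. The following is a minimal sketch of one way to tie the precision to the device, assuming half precision is only wanted when CUDA is present; the commit itself does not state this. `pick_precision` and `load_sd3` are hypothetical helper names, not part of the diff, and the heavy imports are deferred so the selection logic can be exercised without a GPU.

```python
from typing import Tuple

# Repo id copied from the added lines in the diff.
REPO = "stabilityai/stable-diffusion-3-medium-diffusers"


def pick_precision(cuda_available: bool) -> Tuple[str, str]:
    """Return (device, dtype name). Hypothetical helper, not in the commit."""
    if cuda_available:
        return "cuda", "float16"
    # Assumption: fall back to full precision on CPU.
    return "cpu", "float32"


def load_sd3(repo: str = REPO):
    """Load the SD3 pipeline with a dtype matched to the selected device."""
    # Imported lazily so pick_precision stays usable without torch installed.
    import torch
    from diffusers import StableDiffusion3Pipeline

    device, dtype_name = pick_precision(torch.cuda.is_available())
    dtype = getattr(torch, dtype_name)
    return StableDiffusion3Pipeline.from_pretrained(repo, torch_dtype=dtype).to(device)
```

Calling `load_sd3()` downloads the model weights, so it is left uncalled here.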

| 20 |

|

| 21 |

help_text = """

|

| 22 |

To optimize image results:

|

|

|

|

| 43 |

|

| 44 |

# Image Editor

|

| 45 |

edit_file = hf_hub_download(repo_id="stabilityai/cosxl", filename="cosxl_edit.safetensors")

|

| 46 |

EDMEulerScheduler.set_timesteps = set_timesteps_patched

|

| 47 |

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

|

| 48 |

pipe_edit = StableDiffusionXLInstructPix2PixPipeline.from_single_file(

|

| 49 |

edit_file, num_in_channels=8, is_cosxl_edit=True, vae=vae, torch_dtype=torch.float16,

|

| 50 |

)

|

| 51 |

pipe_edit.scheduler = EDMEulerScheduler(sigma_min=0.002, sigma_max=120.0, sigma_data=1.0, prediction_type="v_prediction")

|

| 52 |

pipe_edit.to("cuda")

|

| 53 |

|

| 54 |

# Generator

|

| 55 |

@spaces.GPU(duration=30, queue=False)

|

| 56 |

+

def king(type ,

|

| 57 |

+

input_image ,

|

| 58 |

+

instruction: str ,

|

| 59 |

steps: int = 8,

|

| 60 |

randomize_seed: bool = False,

|

| 61 |

seed: int = 25,

|

|

|

|

| 63 |

image_cfg_scale: float = 1.7,

|

| 64 |

width: int = 1024,

|

| 65 |

height: int = 1024,

|

| 66 |

+

guidance_scale: float = 6,

|

| 67 |

use_resolution_binning: bool = True,

|

| 68 |

progress=gr.Progress(track_tqdm=True),

|

| 69 |

):

|

| 70 |

if type=="Image Editing" :

|

| 71 |

+

if randomize_seed:

|

| 72 |

+

seed = random.randint(0, 99999)

|

| 73 |

text_cfg_scale = text_cfg_scale

|

| 74 |

image_cfg_scale = image_cfg_scale

|

| 75 |

input_image = input_image

|

|

|

|

| 82 |

num_inference_steps=steps, generator=generator).images[0]

|

| 83 |

return seed, output_image

|

| 84 |

else :

|

| 85 |

+

if randomize_seed:

|

| 86 |

+

seed = random.randint(0, 99999)

|

| 87 |

+

generator = torch.Generator().manual_seed(seed)

|

| 88 |

+

image = pipe(

|

| 89 |

+

prompt = instruction,

|

| 90 |

+

guidance_scale = 7,

|

| 91 |

+

num_inference_steps = steps,

|

| 92 |

+

width = width,

|

| 93 |

+

height = height,

|

| 94 |

+

generator = generator

|

| 95 |

+

).images[0]

|

| 96 |

+

return seed, image

|

| 97 |

+

|
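Both branches of `king` now repeat the same `if randomize_seed:` block. A small sketch of how that logic could be factored into one place is shown below; `resolve_seed`, `MAX_SEED`, and the optional `rng` parameter are illustrative names that do not appear in the commit. The upper bound matches the `99999` used in the added lines.

```python
import random
from typing import Optional

MAX_SEED = 99999  # upper bound used by the added lines in the diff


def resolve_seed(seed: int, randomize_seed: bool,
                 rng: Optional[random.Random] = None) -> int:
    """Return a fresh seed when randomization is requested, else the given one.

    Hypothetical helper; `rng` exists only to make the choice reproducible in tests.
    """
    source = rng if rng is not None else random
    if randomize_seed:
        return source.randint(0, MAX_SEED)
    return seed
```

Each branch could then start with `seed = resolve_seed(seed, randomize_seed)` before building its `torch.Generator`.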

| 98 |

+

client = InferenceClient()

|

| 99 |

# Prompt classifier

|

| 100 |

+

def response(instruction, input_image=None ):

|

| 101 |

if input_image is None:

|

| 102 |

output="Image Generation"

|

| 103 |

else:

|

| 104 |

+

text = instruction

|

| 105 |

+

labels = ["Image Editing", "Image Generation"]

|

| 106 |

+

classification = client.zero_shot_classification(text, labels, multi_label=True)

|

| 107 |

+

output = classification[0]

|

| 108 |

+

output = str(output)

|

| 109 |

+

if "Editing" in output:

|

| 110 |

output = "Image Editing"

|

| 111 |

else:

|

| 112 |

output = "Image Generation"

|

| 113 |

return output

|
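The new `response` converts the first classification result to a string and searches it for `"Editing"`. The sketch below shows the same routing decision made on explicit scores instead. It assumes the classifier can be represented as a callable returning `(label, score)` pairs; that shape is an assumption for illustration, not the return type of `InferenceClient.zero_shot_classification`. `route_request` and `fake_classifier` are hypothetical names.

```python
from typing import Callable, List, Optional, Sequence, Tuple

LABELS = ["Image Editing", "Image Generation"]  # labels taken from the diff

# Assumed classifier shape: (text, labels) -> list of (label, score) pairs.
Classifier = Callable[[str, Sequence[str]], List[Tuple[str, float]]]


def route_request(instruction: str, input_image: Optional[object],
                  classify: Classifier) -> str:
    """Pick the task: without an image it is always generation."""
    if input_image is None:
        return "Image Generation"
    scored = classify(instruction, LABELS)
    if not scored:
        return "Image Generation"  # assumed fallback when nothing is returned
    best_label, _ = max(scored, key=lambda pair: pair[1])
    return "Image Editing" if best_label == "Image Editing" else "Image Generation"


def fake_classifier(text: str, labels: Sequence[str]) -> List[Tuple[str, float]]:
    """Stand-in for a real zero-shot model: a keyword match only."""
    edit_score = 0.9 if "make" in text.lower() else 0.1
    return [("Image Editing", edit_score), ("Image Generation", 1.0 - edit_score)]
```

In the Space, a thin adapter around `client.zero_shot_classification` would take the place of `fake_classifier`.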

| 114 |

|

| 115 |

css = '''

|

|

|

|

| 124 |

[

|

| 125 |

"Image Generation",

|

| 126 |

None,

|

| 127 |

+

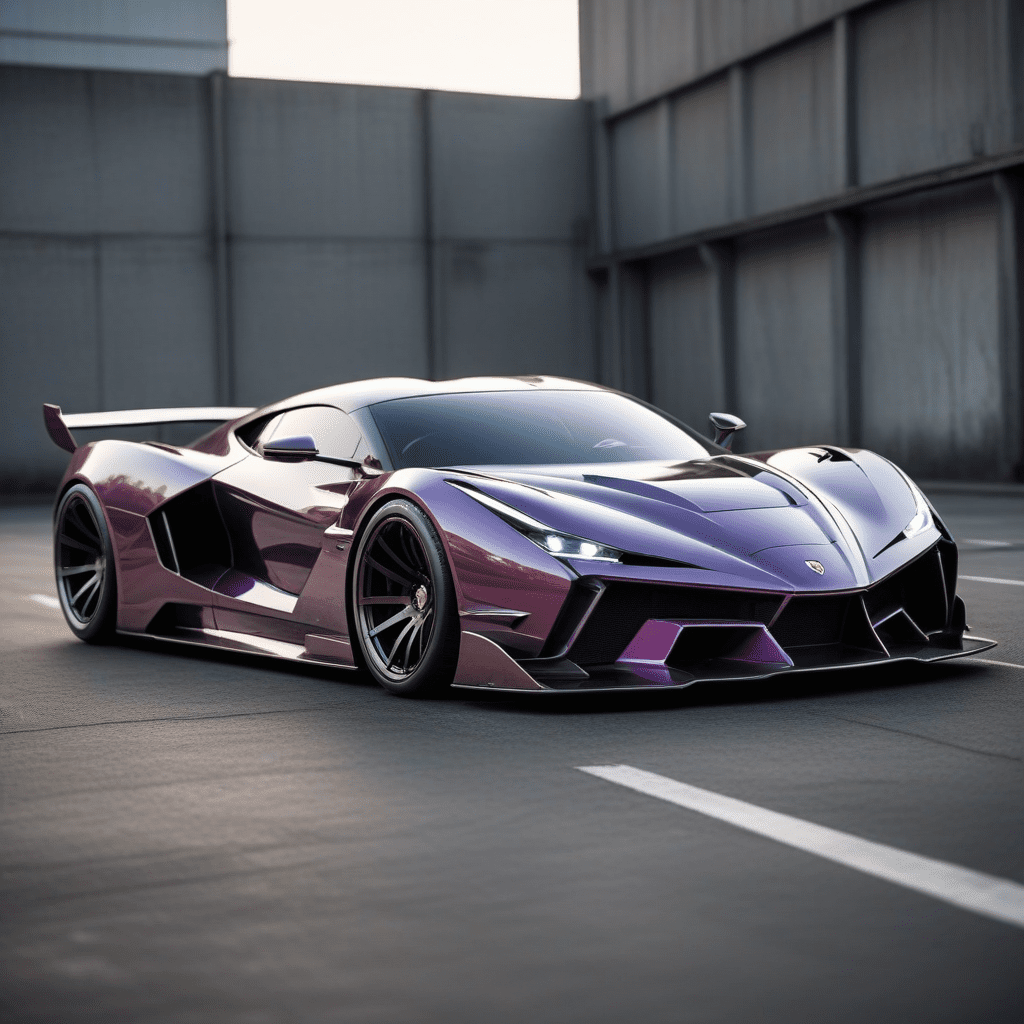

"A luxurious supercar with a unique design. The car should have a pearl white finish, and gold accents. 4k, realistic.",

|

| 128 |

|

| 129 |

],

|

| 130 |

[

|

|

|

|

| 142 |

[

|

| 143 |

"Image Generation",

|

| 144 |

None,

|

| 145 |

+

"Ironman fighting with hulk, wall painting",

|

| 146 |

|

| 147 |

],

|

| 148 |

[

|

red_car.png

CHANGED

|

|

Binary file stored with Git LFS; no text diff.

|

requirements.txt

CHANGED

|

@@ -5,6 +5,7 @@ numpy

|

|

| 5 |

transformers

|

| 6 |

accelerate

|

| 7 |

safetensors

|

| 8 |

-

diffusers

|

| 9 |

spaces

|

| 10 |

-

peft

|

|

|

|

|

|

| 5 |

transformers

|

| 6 |

accelerate

|

| 7 |

safetensors

|

| 8 |

+

git+https://github.com/huggingface/diffusers.git

|

| 9 |

spaces

|

| 10 |

+

peft

|

| 11 |

+

sentencepiece

|
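Because this change adds `sentencepiece` and switches `diffusers` to a Git install, a startup check can surface a missing dependency before the model download begins. The following is a rough sketch using only the standard library; `missing_modules` is a hypothetical helper, and it assumes each package's import name matches its name in requirements.txt.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

For example, `missing_modules(["diffusers", "peft", "sentencepiece"])` returns an empty list when all three are installed.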

supercar.png

CHANGED

|

|

Binary file stored with Git LFS; no text diff.

|