merge origin

- .gitignore +160 -0

- LICENSE +201 -0

- README.md +26 -1

- app.py +92 -0

- controlnet/lineart/__put_your_lineart_model +0 -0

- convertor.py +102 -0

- output/output.txt +0 -0

- requirements.txt +11 -0

- sd_model.py +64 -0

- starline.py +268 -0

- utils.py +53 -0

.gitignore

ADDED


@@ -0,0 +1,160 @@


```gitignore
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/
cover/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
.pybuilder/
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
# For a library or package, you might want to ignore these files since the code is
# intended to run in multiple environments; otherwise, check them in:
# .python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# poetry
# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
# This is especially recommended for binary packages to ensure reproducibility, and is more
# commonly ignored for libraries.
# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
#poetry.lock

# pdm
# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
#pdm.lock
# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
# in version control.
# https://pdm.fming.dev/#use-with-ide
.pdm.toml

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# pytype static type analyzer
.pytype/

# Cython debug symbols
cython_debug/

# PyCharm
# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
# and can be added to the global gitignore or merged into this file. For a more nuclear
# option (not recommended) you can uncomment the following to ignore the entire idea folder.
#.idea/
```

LICENSE

ADDED


@@ -0,0 +1,201 @@


```text
Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright [yyyy] [name of copyright owner]

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
```

README.md

CHANGED

|

@@ -9,4 +9,29 @@ app_file: app.py

|

|

| 9 |

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 9 |

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

+

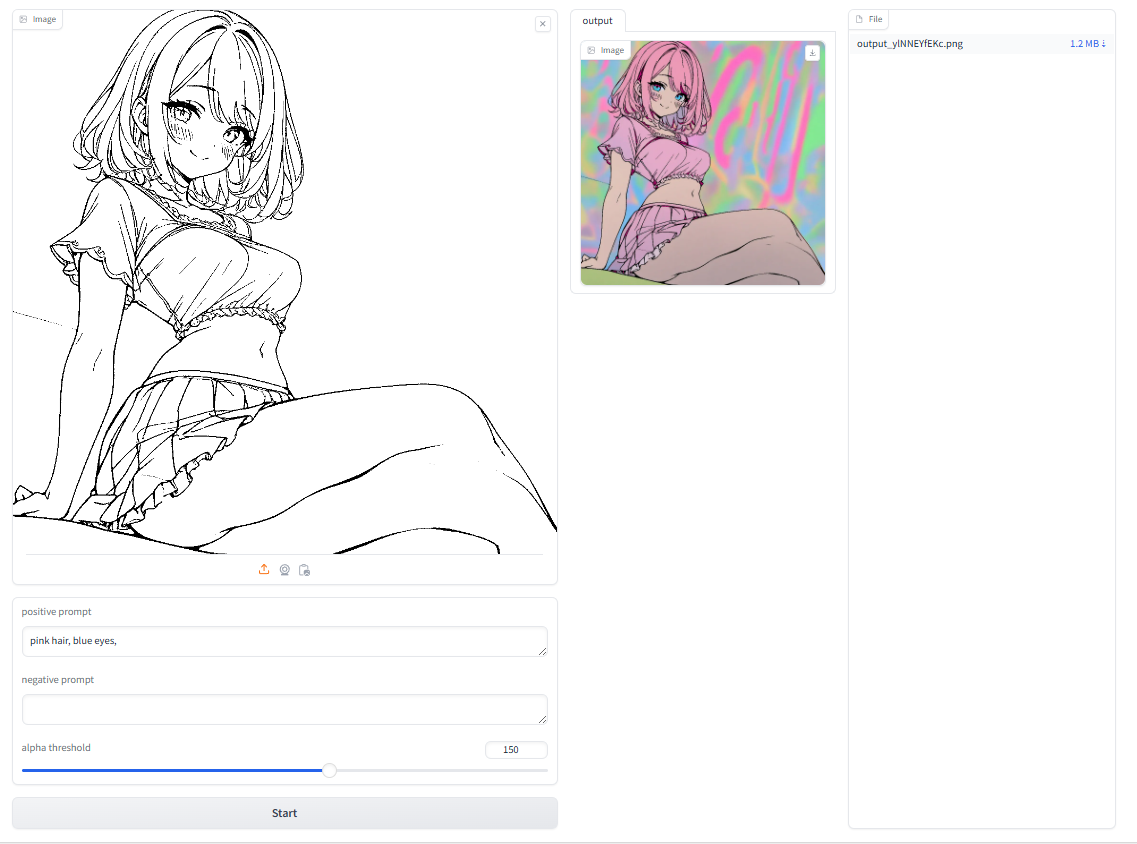

# starline

|

| 13 |

+

**St**rict coloring m**a**chine fo**r** **line** drawings.

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

https://github.com/mattyamonaca/starline/assets/48423148/8199c65c-a19f-42e9-aab7-df5ed6ef5b4c

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

# Usage

|

| 23 |

+

- ```python app.py```

|

| 24 |

+

- Input the line drawing you wish to color (The background should be transparent).

|

| 25 |

+

- Input a prompt describing the color you want to add.

|

| 26 |

+

|

| 27 |

+

- 背景を透過した状態で線画を入力します

|

| 28 |

+

- 付けたい色を説明するプロンプトを入力します

|

| 29 |

+

|

| 30 |

+

# Precautions

|

| 31 |

+

- Image size 1024 x 1024 is recommended.

|

| 32 |

+

- Aliasing is a beta version.

|

| 33 |

+

- Areas finely surrounded by line drawings cannot be colored.

|

| 34 |

+

|

| 35 |

+

- 画像サイズは1024×1024を推奨します

|

| 36 |

+

- エイリアス処理はβ版です。より線画に忠実であることを求める場合は2値線画を推奨します

|

| 37 |

+

- 線画で細かく囲まれた部分は着色できません。着色できない部分は透過した状態で出力されます。

|
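The Usage section asks for a line drawing whose background is transparent. If a scan or export has a white background instead, a minimal sketch like the following could prepare it. This is not part of the commit; the helper name `white_to_transparent` and the default threshold of 250 are assumptions chosen for illustration.

```python
import numpy as np
from PIL import Image


def white_to_transparent(img: Image.Image, threshold: int = 250) -> Image.Image:
    """Return an RGBA copy of img with near-white background pixels made transparent.

    Hypothetical helper: a pixel counts as background when its R, G and B
    values are all >= threshold. Other pixels keep their original alpha.
    """
    rgba = np.array(img.convert("RGBA"), dtype=np.uint8)
    background = np.all(rgba[:, :, :3] >= threshold, axis=-1)  # H x W boolean mask
    rgba[background, 3] = 0  # clear alpha only on background pixels
    return Image.fromarray(rgba)  # a uint8 H x W x 4 array is read as RGBA
```

For example, `white_to_transparent(Image.open("lineart.png")).save("lineart_rgba.png")` (file names illustrative). Anti-aliased gray edge pixels below the threshold stay opaque, which is one reason the Precautions recommend a binary line drawing.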

app.py

ADDED


@@ -0,0 +1,92 @@


```python
import gradio as gr
import sys
from starline import process

from utils import load_cn_model, load_cn_config, randomname
from convertor import pil2cv, cv2pil

from sd_model import get_cn_pipeline, generate, get_cn_detector
import cv2
import os
import numpy as np
from PIL import Image


path = os.getcwd()
output_dir = f"{path}/output"
input_dir = f"{path}/input"
cn_lineart_dir = f"{path}/controlnet/lineart"

load_cn_model(cn_lineart_dir)
load_cn_config(cn_lineart_dir)

class webui:
    def __init__(self):
        self.demo = gr.Blocks()

    def undercoat(self, input_image, pos_prompt, neg_prompt, alpha_th):
        org_line_image = input_image
        image = pil2cv(input_image)
        image = cv2.cvtColor(image, cv2.COLOR_BGRA2RGBA)

        index = np.where(image[:, :, 3] == 0)
        image[index] = [255, 255, 255, 255]
        input_image = cv2pil(image)

        pipe = get_cn_pipeline()
        detectors = get_cn_detector(input_image.resize((1024, 1024), Image.ANTIALIAS))


        gen_image = generate(pipe, detectors, pos_prompt, neg_prompt)
        output = process(gen_image.resize((image.shape[1], image.shape[0]), Image.ANTIALIAS) , org_line_image, alpha_th)

        output = output.resize((image.shape[1], image.shape[0]) , Image.ANTIALIAS)


        output = Image.alpha_composite(output, org_line_image)
        name = randomname(10)
        output.save(f"{output_dir}/output_{name}.png")
        #output = pil2cv(output)
        file_name = f"{output_dir}/output_{name}.png"

        return output, file_name



    def launch(self, share):
        with self.demo:
            with gr.Row():
                with gr.Column():
                    input_image = gr.Image(type="pil", image_mode="RGBA")

                    pos_prompt = gr.Textbox(max_lines=1000, label="positive prompt")
                    neg_prompt = gr.Textbox(max_lines=1000, label="negative prompt")

                    alpha_th = gr.Slider(maximum = 255, value=100, label = "alpha threshold")

                    submit = gr.Button(value="Start")
                with gr.Row():
                    with gr.Column():
                        with gr.Tab("output"):
                            output_0 = gr.Image()

                        output_file = gr.File()
            submit.click(
                self.undercoat,
                inputs=[input_image, pos_prompt, neg_prompt, alpha_th],
                outputs=[output_0, output_file]
            )

        self.demo.queue()
        self.demo.launch(share=share)


if __name__ == "__main__":
    ui = webui()
    if len(sys.argv) > 1:
        if sys.argv[1] == "share":
            ui.launch(share=True)
        else:
            ui.launch(share=False)
    else:
        ui.launch(share=False)
```
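In `webui.undercoat` above, fully transparent pixels (alpha 0) are painted opaque white before the image goes to the ControlNet detector, and every resize uses `Image.ANTIALIAS`. Two caveats, from reading the code rather than running it: `Image.ANTIALIAS` was removed in Pillow 10.0 (it was an alias of `Image.LANCZOS`, which remains available), and the `pil2cv` → `cv2.cvtColor(..., cv2.COLOR_BGRA2RGBA)` → `cv2pil` sequence swaps red and blue three times, so the PIL image handed to the detector ends up with red and blue exchanged. For black line art on a transparent background the swap is invisible. Below is a minimal sketch, not the project's code, of the same flattening step done directly on the RGBA array; `RESAMPLE`, `flatten_transparent_to_white` and `prepare_for_detector` are hypothetical names.

```python
import numpy as np
from PIL import Image

# Image.ANTIALIAS no longer exists in Pillow >= 10; it was an alias of LANCZOS.
RESAMPLE = Image.LANCZOS


def flatten_transparent_to_white(img: Image.Image) -> Image.Image:
    """Paint fully transparent pixels opaque white, keeping RGBA channel order.

    Hypothetical helper mirroring the alpha step in webui.undercoat, without
    the round trip through OpenCV's BGRA layout.
    """
    rgba = np.array(img.convert("RGBA"), dtype=np.uint8)
    rgba[rgba[:, :, 3] == 0] = [255, 255, 255, 255]  # alpha == 0 -> opaque white
    return Image.fromarray(rgba)


def prepare_for_detector(img: Image.Image, size: int = 1024) -> Image.Image:
    """Flatten alpha, then resize to the square size undercoat feeds the detector."""
    return flatten_transparent_to_white(img).resize((size, size), RESAMPLE)
```

Swapping `RESAMPLE` in for `Image.ANTIALIAS` keeps the same filter while working on both older and current Pillow releases.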

controlnet/lineart/__put_your_lineart_model

ADDED


File without changes


convertor.py

ADDED


@@ -0,0 +1,102 @@


| 1 |

+

import pandas as pd

|

| 2 |

+

import numpy as np

|

| 3 |

+

from skimage import color

|

| 4 |

+

from PIL import Image

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

def skimage_rgb2lab(rgb):

|

| 8 |

+

return color.rgb2lab(rgb.reshape(1,1,3))

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

def rgb2df(img):

|

| 12 |

+

h, w, _ = img.shape

|

| 13 |

+

x_l, y_l = np.meshgrid(np.arange(h), np.arange(w), indexing='ij')

|

| 14 |

+

r, g, b = img[:,:,0], img[:,:,1], img[:,:,2]

|

| 15 |

+

df = pd.DataFrame({

|

| 16 |

+

"x_l": x_l.ravel(),

|

| 17 |

+

"y_l": y_l.ravel(),

|

| 18 |

+

"r": r.ravel(),

|

| 19 |

+

"g": g.ravel(),

|

| 20 |

+

"b": b.ravel(),

|

| 21 |

+

})

|

| 22 |

+

return df

|

| 23 |

+

|

| 24 |

+

def mask2df(mask):
    h, w = mask.shape
    x_l, y_l = np.meshgrid(np.arange(h), np.arange(w), indexing='ij')
    flg = mask.astype(int)
    df = pd.DataFrame({
        "x_l_m": x_l.ravel(),
        "y_l_m": y_l.ravel(),
        "m_flg": flg.ravel(),
    })
    return df


def rgba2df(img):
    h, w, _ = img.shape
    x_l, y_l = np.meshgrid(np.arange(h), np.arange(w), indexing='ij')
    r, g, b, a = img[:,:,0], img[:,:,1], img[:,:,2], img[:,:,3]
    df = pd.DataFrame({
        "x_l": x_l.ravel(),
        "y_l": y_l.ravel(),
        "r": r.ravel(),
        "g": g.ravel(),
        "b": b.ravel(),
        "a": a.ravel()
    })
    return df

def hsv2df(img):
    x_l, y_l = np.meshgrid(np.arange(img.shape[0]), np.arange(img.shape[1]), indexing='ij')
    h, s, v = np.transpose(img, (2, 0, 1))
    df = pd.DataFrame({'x_l': x_l.flatten(), 'y_l': y_l.flatten(), 'h': h.flatten(), 's': s.flatten(), 'v': v.flatten()})
    return df

def df2rgba(img_df):
    r_img = img_df.pivot_table(index="x_l", columns="y_l",values= "r").reset_index(drop=True).values
    g_img = img_df.pivot_table(index="x_l", columns="y_l",values= "g").reset_index(drop=True).values
    b_img = img_df.pivot_table(index="x_l", columns="y_l",values= "b").reset_index(drop=True).values
    a_img = img_df.pivot_table(index="x_l", columns="y_l",values= "a").reset_index(drop=True).values
    df_img = np.stack([r_img, g_img, b_img, a_img], 2).astype(np.uint8)
    return df_img

def df2bgra(img_df):
    r_img = img_df.pivot_table(index="x_l", columns="y_l",values= "r").reset_index(drop=True).values
    g_img = img_df.pivot_table(index="x_l", columns="y_l",values= "g").reset_index(drop=True).values
    b_img = img_df.pivot_table(index="x_l", columns="y_l",values= "b").reset_index(drop=True).values
    a_img = img_df.pivot_table(index="x_l", columns="y_l",values= "a").reset_index(drop=True).values
    df_img = np.stack([b_img, g_img, r_img, a_img], 2).astype(np.uint8)
    return df_img

def df2rgb(img_df):
    r_img = img_df.pivot_table(index="x_l", columns="y_l",values= "r").reset_index(drop=True).values
    g_img = img_df.pivot_table(index="x_l", columns="y_l",values= "g").reset_index(drop=True).values
    b_img = img_df.pivot_table(index="x_l", columns="y_l",values= "b").reset_index(drop=True).values
    df_img = np.stack([r_img, g_img, b_img], 2).astype(np.uint8)
    return df_img

def pil2cv(image):
    new_image = np.array(image, dtype=np.uint8)
    if new_image.ndim == 2:
        pass
    elif new_image.shape[2] == 3:
        new_image = new_image[:, :, ::-1]
    elif new_image.shape[2] == 4:
        new_image = new_image[:, :, [2, 1, 0, 3]]
    return new_image

def cv2pil(image):
    new_image = image.copy()
    if new_image.ndim == 2:
        pass
    elif new_image.shape[2] == 3:
        new_image = new_image[:, :, ::-1]
    elif new_image.shape[2] == 4:
        new_image = new_image[:, :, [2, 1, 0, 3]]
    new_image = Image.fromarray(new_image)
    return new_image
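The DataFrame helpers above share one flattening convention. `np.meshgrid(..., indexing='ij')` followed by `ravel()` lists pixels in row-major order, so flat index `i` sits at row `i // w` and column `i % w`. Below is a minimal NumPy-only sketch that checks this convention. It also checks the RGBA/BGRA channel swap used by `pil2cv` and `cv2pil`. The helper name `flat_coords` is hypothetical and not part of the repository.

```python
import numpy as np


def flat_coords(h: int, w: int):
    """Hypothetical helper: per-pixel (row, col) arrays in the order
    produced by meshgrid(..., indexing='ij') followed by ravel()."""
    x_l, y_l = np.meshgrid(np.arange(h), np.arange(w), indexing='ij')
    return x_l.ravel(), y_l.ravel()


h, w = 3, 4
rows, cols = flat_coords(h, w)

# Flat index i maps to (i // w, i % w), i.e. divmod(i, w).
for i in range(h * w):
    assert (rows[i], cols[i]) == divmod(i, w)

# Because the order is row-major, a flattened channel can be restored
# with a plain reshape.
img = np.arange(h * w * 4, dtype=np.uint8).reshape(h, w, 4)
r_flat = img[:, :, 0].ravel()
assert np.array_equal(r_flat.reshape(h, w), img[:, :, 0])

# The RGBA <-> BGRA index swap [2, 1, 0, 3] is its own inverse.
bgra = img[:, :, [2, 1, 0, 3]]
assert np.array_equal(bgra[:, :, [2, 1, 0, 3]], img)
```

If a DataFrame keeps the row order produced by `rgba2df`, a channel column could be rebuilt with `.to_numpy().reshape(h, w)`. The `pivot_table` calls in `df2rgba` group and sort by `x_l`/`y_l` instead, so they do not depend on row order. That is the safer choice if rows may have been shuffled.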

output/output.txt

ADDED

File without changes

requirements.txt

ADDED

@@ -0,0 +1,11 @@

opencv-python==4.7.0.68
pandas==1.5.3
gradio==3.16.2
scikit-learn==1.2.1
scikit-image==0.19.3
Pillow==9.4.0
tqdm==4.63.0
diffusers==0.27.2
gradio==3.16.2
gradio_client==0.2.5

sd_model.py

ADDED

@@ -0,0 +1,64 @@

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler
from diffusers import StableDiffusionXLControlNetPipeline, ControlNetModel, AutoencoderKL
import torch

device = "cuda"

def get_cn_pipeline():
    controlnets = [
        ControlNetModel.from_pretrained("./controlnet/lineart", torch_dtype=torch.float16, use_safetensors=True),
        ControlNetModel.from_pretrained("mattyamonaca/controlnet_line2line_xl", torch_dtype=torch.float16)
    ]

    vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)
    pipe = StableDiffusionXLControlNetPipeline.from_pretrained(
        "cagliostrolab/animagine-xl-3.1", controlnet=controlnets, vae=vae, torch_dtype=torch.float16
    )

    pipe.enable_model_cpu_offload()

    #if pipe.safety_checker is not None:
    #    pipe.safety_checker = lambda images, **kwargs: (images, [False])

    #pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
    #pipe.to(device)

    return pipe

def invert_image(img):
    # `img` is a PIL image supplied by the caller
    # Convert the image to grayscale (in case it is not already black and white)
    img = img.convert('L')
    # Invert every pixel value
    inverted_img = img.point(lambda p: 255 - p)
    # Return the inverted image
    return inverted_img


def get_cn_detector(image):
    #lineart_anime = LineartAnimeDetector.from_pretrained("lllyasviel/Annotators")
    #canny = CannyDetector()
    #lineart_anime_img = lineart_anime(image)
    #canny_img = canny(image)
    #canny_img = canny_img.resize((lineart_anime(image).width, lineart_anime(image).height))
    re_image = invert_image(image)


    detectors = [re_image, image]
    print(detectors)
    return detectors

def generate(pipe, detectors, prompt, negative_prompt):
    default_pos = "1girl, bestquality, 4K, ((white background)), no background"
    default_neg = "shadow, (worst quality, low quality:1.2), (lowres:1.2), (bad anatomy:1.2), (greyscale, monochrome:1.4)"
    # Separate the default tags from the user's tags so the last default tag
    # and the first user tag do not run together
    prompt = default_pos + ", " + prompt
    negative_prompt = default_neg + ", " + negative_prompt
    print(type(pipe))
    image = pipe(
        prompt=prompt,
        negative_prompt = negative_prompt,
        image=detectors,
        num_inference_steps=50,
        controlnet_conditioning_scale=[1.0, 0.2],
    ).images[0]
    return image
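`generate` prepends a fixed set of default tags to whatever the user types. Below is a minimal sketch of a separator-aware join for such tag-style prompts. It assumes comma-separated tags as in `default_pos`. `join_prompt` is a hypothetical helper, not part of the repository.

```python
def join_prompt(default: str, user: str, sep: str = ", ") -> str:
    """Hypothetical helper: append user tags to default tags.

    Whitespace and stray commas at the seam are trimmed, and empty parts
    are dropped, so no tags run together and no dangling separator remains.
    """
    parts = [p.strip().strip(",").strip() for p in (default, user)]
    return sep.join(p for p in parts if p)


default_pos = "1girl, bestquality, 4K, ((white background)), no background"
print(join_prompt(default_pos, "blue hair"))
print(join_prompt(default_pos, ""))
```

Trimming stray commas at the seam keeps the result clean when the user's text is empty or already begins with a separator.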

starline.py

ADDED

@@ -0,0 +1,268 @@

from PIL import Image, ImageFilter
from collections import defaultdict
from skimage import color as sk_color
from PIL import Image
from tqdm import tqdm
from skimage.color import deltaE_ciede2000, rgb2lab
import cv2
import numpy as np


def replace_color(image, color_1, color_2, alpha_np):
    # Convert the image data to a NumPy array
    data = np.array(image)

    # The image is in RGBA mode, so account for 4 channels when reshaping
    original_shape = data.shape
    data = data.reshape(-1, 4)  # Flatten to one row of 4 channels (RGBA) per pixel

    # Compare only the RGB values when searching for pixels matching color_1
    matches = np.all(data[:, :3] == color_1, axis=1)

    # Counters for tracking progress
    nochange_count = 0
    idx = 0

    while np.any(matches):
        idx += 1
        new_matches = np.zeros_like(matches)
        match_num = np.sum(matches)
        for i in tqdm(range(len(data))):
            if matches[i]:
                x, y = divmod(i, original_shape[1])
                neighbors = [
                    (x-1, y), (x+1, y), (x, y-1), (x, y+1)  # up, down, left, right
                ]
                replacement_found = False
                for nx, ny in neighbors:
                    if 0 <= nx < original_shape[0] and 0 <= ny < original_shape[1]:
                        ni = nx * original_shape[1] + ny
                        # Compare RGB only; ignore alpha
                        if not np.all(data[ni, :3] == color_1, axis=0) and not np.all(data[ni, :3] == color_2, axis=0):
                            data[i, :3] = data[ni, :3]  # Update the RGB values only
                            replacement_found = True
                            continue
                if not replacement_found:
                    new_matches[i] = True
        matches = new_matches
        if match_num == np.sum(matches):
            nochange_count += 1
        if nochange_count > 5:
            break

    # Return the final image as a PIL image
    data = data.reshape(original_shape)
    data[:, :, 3] = 255 - alpha_np
    return Image.fromarray(data, 'RGBA')

def recolor_lineart_and_composite(lineart_image, base_image, new_color, alpha_th):
    """
    Recolor an RGBA lineart image to a single new color and composite it over a base image.

    The lineart alpha channel is binarized: pixels with alpha below alpha_th become
    fully transparent and all others become fully opaque.

    Args:
        lineart_image (PIL.Image): The lineart image with RGBA channels.
        base_image (PIL.Image): The base image to composite onto.
        new_color (tuple): The new RGB color for the lineart (e.g., (255, 0, 0) for red).
        alpha_th (int): Alpha threshold (0-255) used to binarize the lineart alpha channel.

    Returns:
        tuple: The composited image (PIL.Image) with the recolored lineart on top,
        and the binarized alpha channel as a uint8 NumPy array.
    """
    # Ensure images are in RGBA mode
    if lineart_image.mode != 'RGBA':
        lineart_image = lineart_image.convert('RGBA')
    if base_image.mode != 'RGBA':
        base_image = base_image.convert('RGBA')

    # Extract the alpha channel from the lineart image
    r, g, b, alpha = lineart_image.split()

    alpha_np = np.array(alpha)
    alpha_np[alpha_np < alpha_th] = 0
    alpha_np[alpha_np >= alpha_th] = 255

    new_alpha = Image.fromarray(alpha_np)

    # Create a new image using the new color and the alpha channel from the original lineart
    new_lineart_image = Image.merge('RGBA', (
        Image.new('L', lineart_image.size, int(new_color[0])),
        Image.new('L', lineart_image.size, int(new_color[1])),
        Image.new('L', lineart_image.size, int(new_color[2])),
        new_alpha
    ))

    # Composite the new lineart image over the base image
    composite_image = Image.alpha_composite(base_image, new_lineart_image)

    return composite_image, alpha_np


def thicken_and_recolor_lines(base_image, lineart, thickness=3, new_color=(0, 0, 0)):
    """
    Thicken the lines of a lineart image, recolor them, and composite onto another image,
    while preserving the transparency of the original lineart.

    Args:
        base_image (PIL.Image): The base image to composite onto.
        lineart (PIL.Image): The lineart image with transparent background.
        thickness (int): The desired thickness of the lines.
        new_color (tuple): The new color to apply to the lines (R, G, B).

    Returns:
        PIL.Image: The image with the recolored and thickened lineart composited on top.
    """
    # Ensure both images are in RGBA format
    if base_image.mode != 'RGBA':
        base_image = base_image.convert('RGBA')
    if lineart.mode != 'RGB':
        lineart = lineart.convert('RGBA')

    # Convert the lineart image to OpenCV format
    lineart_cv = np.array(lineart)

    white_pixels = np.sum(lineart_cv == 255)
    black_pixels = np.sum(lineart_cv == 0)
