---
language:
- en
license: llama2
library_name: transformers
tags:
- merge
base_model:
- sophosympatheia/Midnight-Rose-70B-v2.0.3
- codellama/CodeLlama-70b-Python-hf
pipeline_tag: text-generation
model-index:
- name: CodeRosa-70B-AB1
  results:
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: AI2 Reasoning Challenge (25-Shot)
      type: ai2_arc
      config: ARC-Challenge
      split: test
      args:
        num_few_shot: 25
    metrics:
    - type: acc_norm
      value: 65.53
      name: normalized accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=altomek/CodeRosa-70B-AB1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: HellaSwag (10-Shot)
      type: hellaswag
      split: validation
      args:
        num_few_shot: 10
    metrics:
    - type: acc_norm
      value: 83.16
      name: normalized accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=altomek/CodeRosa-70B-AB1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: MMLU (5-Shot)
      type: cais/mmlu
      config: all
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 59.87
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=altomek/CodeRosa-70B-AB1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: TruthfulQA (0-shot)
      type: truthful_qa
      config: multiple_choice
      split: validation
      args:
        num_few_shot: 0
    metrics:
    - type: mc2
      value: 49.85
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=altomek/CodeRosa-70B-AB1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: Winogrande (5-shot)
      type: winogrande
      config: winogrande_xl
      split: validation
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 81.29
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=altomek/CodeRosa-70B-AB1
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: GSM8k (5-shot)
      type: gsm8k
      config: main
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 44.5
      name: accuracy
    source:
      url: >-
        https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=altomek/CodeRosa-70B-AB1
      name: Open LLM Leaderboard
---

intro music...

CodeRosa-70B-AB1

I wanted a model that could serve as an everyday helpful companion with some coding skills. My idea was that Llama's censorship implies a deeper understanding of human emotions, and I wanted that part of Llama to carry over into this merge.

The model adopted a task-oriented approach from CodeLlama Python and thus requires precise prompting. It can produce longer texts as well as shorter responses. It tends to avoid happy endings and instead surprises with open-ended scenarios that invite further interaction. It prefers spelling numbers out over writing them as digits, but YMMV.

I created this model for personal exploration and found it to be highly successful, so I chose to share it with the community. I would like to make a next iteration of this model in the future. The mission stays the same: a very nice bot, able to talk about a variety of topics in a very emotional way, with some kick for programming and the ability to teach a few things, and on top of that a good text summarizer, ideally with Polish as an available language. That is the purpose. Did I succeed with this merge? I have to experiment more with the two models below. I like this result and love how it approaches problems; this iteration was worth publishing even though it is not much tested!

Demo uses:

Some topics are best explored with as few additional instructions as possible

This model has empathy

It is creative

It makes mistakes but is still useful

Context size of 11K did not yield satisfactory results... :P

but it can question its own actions.

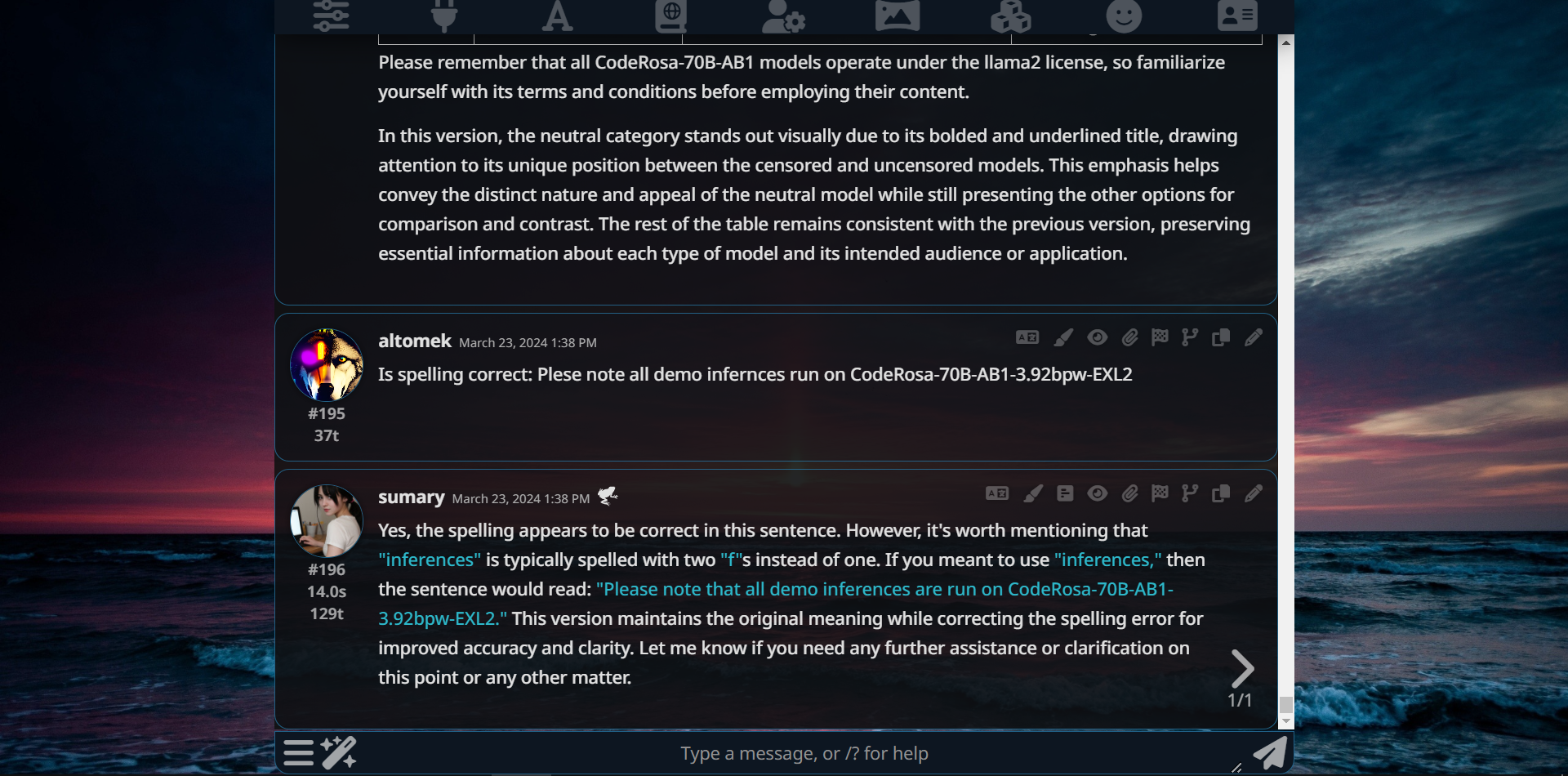

Please note that all demo inferences are run on CodeRosa-70B-AB1-3.92bpw-EXL2.

Ingredients

Settings

The settings from Midnight-Rose should work in SillyTavern; they are almost the same as what I use for testing. The model works fine with almost all samplers disabled to get more deterministic outputs; however, temperature should be set to a non-zero value.

I use max_seq_len 8K with alpha_value 2.65. The model also works with 11K context when alpha_value is set to 5.5. However, the best outputs come with context around 6K.
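As a rough sketch, the context settings above can be written down as data and, assuming the loader applies the common NTK-aware RoPE scaling rule, turned into an effective rotary base. The names `REPORTED_SETTINGS`, `pick_settings`, and `ntk_rope_base` are hypothetical, the token counts 8192 and 11264 are one reading of "8K" and "11K", and the default `base` and `head_dim` values are assumptions rather than values read from this model's config.

```python
# Context/alpha pairs quoted in this card. Token counts assume 1K = 1024 tokens.
REPORTED_SETTINGS = [
    {"max_seq_len": 8192, "alpha_value": 2.65},
    {"max_seq_len": 11264, "alpha_value": 5.5},
]


def pick_settings(needed_tokens):
    """Return the smallest reported setting whose context fits needed_tokens."""
    for setting in sorted(REPORTED_SETTINGS, key=lambda s: s["max_seq_len"]):
        if needed_tokens <= setting["max_seq_len"]:
            return setting
    raise ValueError("no reported setting covers %d tokens" % needed_tokens)


def ntk_rope_base(alpha_value, base=10000.0, head_dim=128):
    """NTK-aware scaling of the rotary base: base * alpha ** (d / (d - 2)).

    base and head_dim are assumed defaults, not read from the model config.
    """
    return base * alpha_value ** (head_dim / (head_dim - 2))


if __name__ == "__main__":
    chosen = pick_settings(6000)
    print(chosen, ntk_rope_base(chosen["alpha_value"]))
```

A prompt of around 6K tokens selects the 8K / 2.65 pair, which matches the range where the outputs were best in my testing.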

Terms and Conditions of Use

The following table outlines the primary characteristics and intended uses of my CodeRosa-70B-AB1 models:

| Model Type | Purpose | Target Users | Key Features |
|---|---|---|---|
| Censored | Suitable for general audiences and sensitive topics | Educational institutions, families, and individuals seeking age-appropriate content | Restricts explicit or mature material |
| Neutral (**this one**) | Balances accessibility with openness | Universities, researchers, and curious minds | Encourages exploration and intellectual exchange |
| Uncensored | Ideal for adults and specialized fields | Professionals, experts, and advanced scholars | Offers unfiltered access to diverse viewpoints and knowledge |

Please remember that all CodeRosa-70B-AB1 models operate under the llama2 license, so familiarize yourself with its terms and conditions before using their output.

Quants

- GGUF quants

- 6bpw

- 5bpw

- 4.9bpw

- 4.5bpw

- 4bpw

- 3.92bpw --> 40GB VRAM

- 3.5bpw

- 3bpw --> this and lower quants do not represent the model's full potential!

- 2.4bpw --> 24GB VRAM

- measurements --> ExLlamav2 measurements
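To get a feel for how bits per weight relate to the VRAM notes in the list above, here is a back-of-the-envelope sketch. It assumes a nominal 70 billion parameters and counts only the quantized weights; the cache and runtime overhead come on top. The function name `estimated_weight_gb` is hypothetical.

```python
def estimated_weight_gb(bits_per_weight, n_params=70e9):
    """Approximate size of the quantized weights in GB (10**9 bytes).

    n_params is the nominal parameter count (an assumption); cache and
    runtime overhead are not included.
    """
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for bpw in (6.0, 5.0, 4.9, 4.5, 4.0, 3.92, 3.5, 3.0, 2.4):
        print("%.2f bpw -> ~%.1f GB of weights" % (bpw, estimated_weight_gb(bpw)))
```

Under these assumptions the 3.92bpw quant needs roughly 34 GB and the 2.4bpw quant roughly 21 GB for weights alone, which leaves some room for the cache within the 40GB and 24GB figures noted in the list.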

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Metric | Value |
|---|---|
| Avg. | 64.04 |
| AI2 Reasoning Challenge (25-Shot) | 65.53 |
| HellaSwag (10-Shot) | 83.16 |
| MMLU (5-Shot) | 59.87 |
| TruthfulQA (0-shot) | 49.85 |
| Winogrande (5-shot) | 81.29 |
| GSM8k (5-shot) | 44.50 |

PS

I welcome your comments about this model.

Made with CodeRosa-70B-AB1 :P