text

stringlengths 23

371k

| source

stringlengths 32

152

|

|---|---|

!---

Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Installation

Install 🤗 Transformers for whichever deep learning library you're working with, setup your cache, and optionally configure 🤗 Transformers to run offline.

🤗 Transformers is tested on Python 3.6+, PyTorch 1.1.0+, TensorFlow 2.0+, and Flax. Follow the installation instructions below for the deep learning library you are using:

* [PyTorch](https://pytorch.org/get-started/locally/) installation instructions.

* [TensorFlow 2.0](https://www.tensorflow.org/install/pip) installation instructions.

* [Flax](https://flax.readthedocs.io/en/latest/) installation instructions.

## Install with pip

You should install 🤗 Transformers in a [virtual environment](https://docs.python.org/3/library/venv.html). If you're unfamiliar with Python virtual environments, take a look at this [guide](https://packaging.python.org/guides/installing-using-pip-and-virtual-environments/). A virtual environment makes it easier to manage different projects, and avoid compatibility issues between dependencies.

Start by creating a virtual environment in your project directory:

```bash

python -m venv .env

```

Activate the virtual environment. On Linux and MacOs:

```bash

source .env/bin/activate

```

Activate Virtual environment on Windows

```bash

.env/Scripts/activate

```

Now you're ready to install 🤗 Transformers with the following command:

```bash

pip install transformers

```

For CPU-support only, you can conveniently install 🤗 Transformers and a deep learning library in one line. For example, install 🤗 Transformers and PyTorch with:

```bash

pip install 'transformers[torch]'

```

🤗 Transformers and TensorFlow 2.0:

```bash

pip install 'transformers[tf-cpu]'

```

<Tip warning={true}>

M1 / ARM Users

You will need to install the following before installing TensorFLow 2.0

```

brew install cmake

brew install pkg-config

```

</Tip>

🤗 Transformers and Flax:

```bash

pip install 'transformers[flax]'

```

Finally, check if 🤗 Transformers has been properly installed by running the following command. It will download a pretrained model:

```bash

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('we love you'))"

```

Then print out the label and score:

```bash

[{'label': 'POSITIVE', 'score': 0.9998704791069031}]

```

## Install from source

Install 🤗 Transformers from source with the following command:

```bash

pip install git+https://github.com/huggingface/transformers

```

This command installs the bleeding edge `main` version rather than the latest `stable` version. The `main` version is useful for staying up-to-date with the latest developments. For instance, if a bug has been fixed since the last official release but a new release hasn't been rolled out yet. However, this means the `main` version may not always be stable. We strive to keep the `main` version operational, and most issues are usually resolved within a few hours or a day. If you run into a problem, please open an [Issue](https://github.com/huggingface/transformers/issues) so we can fix it even sooner!

Check if 🤗 Transformers has been properly installed by running the following command:

```bash

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('I love you'))"

```

## Editable install

You will need an editable install if you'd like to:

* Use the `main` version of the source code.

* Contribute to 🤗 Transformers and need to test changes in the code.

Clone the repository and install 🤗 Transformers with the following commands:

```bash

git clone https://github.com/huggingface/transformers.git

cd transformers

pip install -e .

```

These commands will link the folder you cloned the repository to and your Python library paths. Python will now look inside the folder you cloned to in addition to the normal library paths. For example, if your Python packages are typically installed in `~/anaconda3/envs/main/lib/python3.7/site-packages/`, Python will also search the folder you cloned to: `~/transformers/`.

<Tip warning={true}>

You must keep the `transformers` folder if you want to keep using the library.

</Tip>

Now you can easily update your clone to the latest version of 🤗 Transformers with the following command:

```bash

cd ~/transformers/

git pull

```

Your Python environment will find the `main` version of 🤗 Transformers on the next run.

## Install with conda

Install from the conda channel `huggingface`:

```bash

conda install -c huggingface transformers

```

## Cache setup

Pretrained models are downloaded and locally cached at: `~/.cache/huggingface/hub`. This is the default directory given by the shell environment variable `TRANSFORMERS_CACHE`. On Windows, the default directory is given by `C:\Users\username\.cache\huggingface\hub`. You can change the shell environment variables shown below - in order of priority - to specify a different cache directory:

1. Shell environment variable (default): `HUGGINGFACE_HUB_CACHE` or `TRANSFORMERS_CACHE`.

2. Shell environment variable: `HF_HOME`.

3. Shell environment variable: `XDG_CACHE_HOME` + `/huggingface`.

<Tip>

🤗 Transformers will use the shell environment variables `PYTORCH_TRANSFORMERS_CACHE` or `PYTORCH_PRETRAINED_BERT_CACHE` if you are coming from an earlier iteration of this library and have set those environment variables, unless you specify the shell environment variable `TRANSFORMERS_CACHE`.

</Tip>

## Offline mode

Run 🤗 Transformers in a firewalled or offline environment with locally cached files by setting the environment variable `TRANSFORMERS_OFFLINE=1`.

<Tip>

Add [🤗 Datasets](https://huggingface.co/docs/datasets/) to your offline training workflow with the environment variable `HF_DATASETS_OFFLINE=1`.

</Tip>

```bash

HF_DATASETS_OFFLINE=1 TRANSFORMERS_OFFLINE=1 \

python examples/pytorch/translation/run_translation.py --model_name_or_path t5-small --dataset_name wmt16 --dataset_config ro-en ...

```

This script should run without hanging or waiting to timeout because it won't attempt to download the model from the Hub.

You can also bypass loading a model from the Hub from each [`~PreTrainedModel.from_pretrained`] call with the [`local_files_only`] parameter. When set to `True`, only local files are loaded:

```py

from transformers import T5Model

model = T5Model.from_pretrained("./path/to/local/directory", local_files_only=True)

```

### Fetch models and tokenizers to use offline

Another option for using 🤗 Transformers offline is to download the files ahead of time, and then point to their local path when you need to use them offline. There are three ways to do this:

* Download a file through the user interface on the [Model Hub](https://huggingface.co/models) by clicking on the ↓ icon.

* Use the [`PreTrainedModel.from_pretrained`] and [`PreTrainedModel.save_pretrained`] workflow:

1. Download your files ahead of time with [`PreTrainedModel.from_pretrained`]:

```py

>>> from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

>>> tokenizer = AutoTokenizer.from_pretrained("bigscience/T0_3B")

>>> model = AutoModelForSeq2SeqLM.from_pretrained("bigscience/T0_3B")

```

2. Save your files to a specified directory with [`PreTrainedModel.save_pretrained`]:

```py

>>> tokenizer.save_pretrained("./your/path/bigscience_t0")

>>> model.save_pretrained("./your/path/bigscience_t0")

```

3. Now when you're offline, reload your files with [`PreTrainedModel.from_pretrained`] from the specified directory:

```py

>>> tokenizer = AutoTokenizer.from_pretrained("./your/path/bigscience_t0")

>>> model = AutoModel.from_pretrained("./your/path/bigscience_t0")

```

* Programmatically download files with the [huggingface_hub](https://github.com/huggingface/huggingface_hub/tree/main/src/huggingface_hub) library:

1. Install the `huggingface_hub` library in your virtual environment:

```bash

python -m pip install huggingface_hub

```

2. Use the [`hf_hub_download`](https://huggingface.co/docs/hub/adding-a-library#download-files-from-the-hub) function to download a file to a specific path. For example, the following command downloads the `config.json` file from the [T0](https://huggingface.co/bigscience/T0_3B) model to your desired path:

```py

>>> from huggingface_hub import hf_hub_download

>>> hf_hub_download(repo_id="bigscience/T0_3B", filename="config.json", cache_dir="./your/path/bigscience_t0")

```

Once your file is downloaded and locally cached, specify it's local path to load and use it:

```py

>>> from transformers import AutoConfig

>>> config = AutoConfig.from_pretrained("./your/path/bigscience_t0/config.json")

```

<Tip>

See the [How to download files from the Hub](https://huggingface.co/docs/hub/how-to-downstream) section for more details on downloading files stored on the Hub.

</Tip>

| huggingface/transformers/blob/main/docs/source/en/installation.md |

!--Copyright 2021 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# GPT Neo

## Overview

The GPTNeo model was released in the [EleutherAI/gpt-neo](https://github.com/EleutherAI/gpt-neo) repository by Sid

Black, Stella Biderman, Leo Gao, Phil Wang and Connor Leahy. It is a GPT2 like causal language model trained on the

[Pile](https://pile.eleuther.ai/) dataset.

The architecture is similar to GPT2 except that GPT Neo uses local attention in every other layer with a window size of

256 tokens.

This model was contributed by [valhalla](https://huggingface.co/valhalla).

## Usage example

The `generate()` method can be used to generate text using GPT Neo model.

```python

>>> from transformers import GPTNeoForCausalLM, GPT2Tokenizer

>>> model = GPTNeoForCausalLM.from_pretrained("EleutherAI/gpt-neo-1.3B")

>>> tokenizer = GPT2Tokenizer.from_pretrained("EleutherAI/gpt-neo-1.3B")

>>> prompt = (

... "In a shocking finding, scientists discovered a herd of unicorns living in a remote, "

... "previously unexplored valley, in the Andes Mountains. Even more surprising to the "

... "researchers was the fact that the unicorns spoke perfect English."

... )

>>> input_ids = tokenizer(prompt, return_tensors="pt").input_ids

>>> gen_tokens = model.generate(

... input_ids,

... do_sample=True,

... temperature=0.9,

... max_length=100,

... )

>>> gen_text = tokenizer.batch_decode(gen_tokens)[0]

```

## Combining GPT-Neo and Flash Attention 2

First, make sure to install the latest version of Flash Attention 2 to include the sliding window attention feature, and make sure your hardware is compatible with Flash-Attention 2. More details are available [here](https://huggingface.co/docs/transformers/perf_infer_gpu_one#flashattention-2) concerning the installation.

Make sure as well to load your model in half-precision (e.g. `torch.float16`).

To load and run a model using Flash Attention 2, refer to the snippet below:

```python

>>> import torch

>>> from transformers import AutoModelForCausalLM, AutoTokenizer

>>> device = "cuda" # the device to load the model onto

>>> model = AutoModelForCausalLM.from_pretrained("EleutherAI/gpt-neo-2.7B", torch_dtype=torch.float16, attn_implementation="flash_attention_2")

>>> tokenizer = AutoTokenizer.from_pretrained("EleutherAI/gpt-neo-2.7B")

>>> prompt = "def hello_world():"

>>> model_inputs = tokenizer([prompt], return_tensors="pt").to(device)

>>> model.to(device)

>>> generated_ids = model.generate(**model_inputs, max_new_tokens=100, do_sample=True)

>>> tokenizer.batch_decode(generated_ids)[0]

"def hello_world():\n >>> run_script("hello.py")\n >>> exit(0)\n<|endoftext|>"

```

### Expected speedups

Below is an expected speedup diagram that compares pure inference time between the native implementation in transformers using `EleutherAI/gpt-neo-2.7B` checkpoint and the Flash Attention 2 version of the model.

Note that for GPT-Neo it is not possible to train / run on very long context as the max [position embeddings](https://huggingface.co/EleutherAI/gpt-neo-2.7B/blob/main/config.json#L58 ) is limited to 2048 - but this is applicable to all gpt-neo models and not specific to FA-2

<div style="text-align: center">

<img src="https://user-images.githubusercontent.com/49240599/272241893-b1c66b75-3a48-4265-bc47-688448568b3d.png">

</div>

## Resources

- [Text classification task guide](../tasks/sequence_classification)

- [Causal language modeling task guide](../tasks/language_modeling)

## GPTNeoConfig

[[autodoc]] GPTNeoConfig

<frameworkcontent>

<pt>

## GPTNeoModel

[[autodoc]] GPTNeoModel

- forward

## GPTNeoForCausalLM

[[autodoc]] GPTNeoForCausalLM

- forward

## GPTNeoForQuestionAnswering

[[autodoc]] GPTNeoForQuestionAnswering

- forward

## GPTNeoForSequenceClassification

[[autodoc]] GPTNeoForSequenceClassification

- forward

## GPTNeoForTokenClassification

[[autodoc]] GPTNeoForTokenClassification

- forward

</pt>

<jax>

## FlaxGPTNeoModel

[[autodoc]] FlaxGPTNeoModel

- __call__

## FlaxGPTNeoForCausalLM

[[autodoc]] FlaxGPTNeoForCausalLM

- __call__

</jax>

</frameworkcontent>

| huggingface/transformers/blob/main/docs/source/en/model_doc/gpt_neo.md |

Gradio Demo: rows_and_columns

```

!pip install -q gradio

```

```

# Downloading files from the demo repo

import os

os.mkdir('images')

!wget -q -O images/cheetah.jpg https://github.com/gradio-app/gradio/raw/main/demo/rows_and_columns/images/cheetah.jpg

```

```

import gradio as gr

with gr.Blocks() as demo:

with gr.Row():

text1 = gr.Textbox(label="t1")

slider2 = gr.Textbox(label="s2")

drop3 = gr.Dropdown(["a", "b", "c"], label="d3")

with gr.Row():

with gr.Column(scale=1, min_width=600):

text1 = gr.Textbox(label="prompt 1")

text2 = gr.Textbox(label="prompt 2")

inbtw = gr.Button("Between")

text4 = gr.Textbox(label="prompt 1")

text5 = gr.Textbox(label="prompt 2")

with gr.Column(scale=2, min_width=600):

img1 = gr.Image("images/cheetah.jpg")

btn = gr.Button("Go")

if __name__ == "__main__":

demo.launch()

```

| gradio-app/gradio/blob/main/demo/rows_and_columns/run.ipynb |

Gradio Demo: lineplot_component

```

!pip install -q gradio vega_datasets

```

```

import gradio as gr

from vega_datasets import data

with gr.Blocks() as demo:

gr.LinePlot(

data.stocks(),

x="date",

y="price",

color="symbol",

color_legend_position="bottom",

title="Stock Prices",

tooltip=["date", "price", "symbol"],

height=300,

width=300,

container=False,

)

if __name__ == "__main__":

demo.launch()

```

| gradio-app/gradio/blob/main/demo/lineplot_component/run.ipynb |

@gradio/box

## 0.1.6

### Patch Changes

- Updated dependencies [[`73268ee`](https://github.com/gradio-app/gradio/commit/73268ee2e39f23ebdd1e927cb49b8d79c4b9a144)]:

- @gradio/atoms@0.4.1

## 0.1.5

### Patch Changes

- Updated dependencies [[`4d1cbbc`](https://github.com/gradio-app/gradio/commit/4d1cbbcf30833ef1de2d2d2710c7492a379a9a00)]:

- @gradio/atoms@0.4.0

## 0.1.4

### Patch Changes

- Updated dependencies []:

- @gradio/atoms@0.3.1

## 0.1.3

### Patch Changes

- Updated dependencies [[`9caddc17b`](https://github.com/gradio-app/gradio/commit/9caddc17b1dea8da1af8ba724c6a5eab04ce0ed8)]:

- @gradio/atoms@0.3.0

## 0.1.2

### Patch Changes

- Updated dependencies [[`f816136a0`](https://github.com/gradio-app/gradio/commit/f816136a039fa6011be9c4fb14f573e4050a681a)]:

- @gradio/atoms@0.2.2

## 0.1.1

### Patch Changes

- Updated dependencies [[`3cdeabc68`](https://github.com/gradio-app/gradio/commit/3cdeabc6843000310e1a9e1d17190ecbf3bbc780), [`fad92c29d`](https://github.com/gradio-app/gradio/commit/fad92c29dc1f5cd84341aae417c495b33e01245f)]:

- @gradio/atoms@0.2.1

## 0.1.0

### Features

- [#5498](https://github.com/gradio-app/gradio/pull/5498) [`287fe6782`](https://github.com/gradio-app/gradio/commit/287fe6782825479513e79a5cf0ba0fbfe51443d7) - Publish all components to npm. Thanks [@pngwn](https://github.com/pngwn)!

## 0.1.0-beta.7

### Patch Changes

- Updated dependencies [[`667802a6c`](https://github.com/gradio-app/gradio/commit/667802a6cdbfb2ce454a3be5a78e0990b194548a), [`c476bd5a5`](https://github.com/gradio-app/gradio/commit/c476bd5a5b70836163b9c69bf4bfe068b17fbe13)]:

- @gradio/atoms@0.2.0-beta.6

## 0.1.0-beta.6

### Features

- [#6016](https://github.com/gradio-app/gradio/pull/6016) [`83e947676`](https://github.com/gradio-app/gradio/commit/83e947676d327ca2ab6ae2a2d710c78961c771a0) - Format js in v4 branch. Thanks [@freddyaboulton](https://github.com/freddyaboulton)!

## 0.1.0-beta.5

### Features

- [#5960](https://github.com/gradio-app/gradio/pull/5960) [`319c30f3f`](https://github.com/gradio-app/gradio/commit/319c30f3fccf23bfe1da6c9b132a6a99d59652f7) - rererefactor frontend files. Thanks [@pngwn](https://github.com/pngwn)!

- [#5938](https://github.com/gradio-app/gradio/pull/5938) [`13ed8a485`](https://github.com/gradio-app/gradio/commit/13ed8a485d5e31d7d75af87fe8654b661edcca93) - V4: Use beta release versions for '@gradio' packages. Thanks [@freddyaboulton](https://github.com/freddyaboulton)!

- [#5949](https://github.com/gradio-app/gradio/pull/5949) [`1c390f101`](https://github.com/gradio-app/gradio/commit/1c390f10199142a41722ba493a0c86b58245da15) - Merge main again. Thanks [@pngwn](https://github.com/pngwn)!

## 0.0.7

### Patch Changes

- Updated dependencies [[`e70805d54`](https://github.com/gradio-app/gradio/commit/e70805d54cc792452545f5d8eccc1aa0212a4695)]:

- @gradio/atoms@0.2.0

## 0.0.6

### Patch Changes

- Updated dependencies []:

- @gradio/atoms@0.1.4

## 0.0.5

### Patch Changes

- Updated dependencies []:

- @gradio/atoms@0.1.3

## 0.0.4

### Patch Changes

- Updated dependencies []:

- @gradio/atoms@0.1.2

## 0.0.3

### Patch Changes

- Updated dependencies []:

- @gradio/atoms@0.1.1

## 0.0.2

### Features

- [#5215](https://github.com/gradio-app/gradio/pull/5215) [`fbdad78a`](https://github.com/gradio-app/gradio/commit/fbdad78af4c47454cbb570f88cc14bf4479bbceb) - Lazy load interactive or static variants of a component individually, rather than loading both variants regardless. This change will improve performance for many applications. Thanks [@pngwn](https://github.com/pngwn)!

| gradio-app/gradio/blob/main/js/box/CHANGELOG.md |

FrameworkSwitchCourse {fw} />

<!-- DISABLE-FRONTMATTER-SECTIONS -->

# End-of-chapter quiz[[end-of-chapter-quiz]]

<CourseFloatingBanner

chapter={4}

classNames="absolute z-10 right-0 top-0"

/>

Let's test what you learned in this chapter!

### 1. What are models on the Hub limited to?

<Question

choices={[

{

text: "Models from the 🤗 Transformers library.",

explain: "While models from the 🤗 Transformers library are supported on the Hugging Face Hub, they're not the only ones!"

},

{

text: "All models with a similar interface to 🤗 Transformers.",

explain: "No interface requirement is set when uploading models to the Hugging Face Hub. "

},

{

text: "There are no limits.",

explain: "Right! There are no limits when uploading models to the Hub.",

correct: true

},

{

text: "Models that are in some way related to NLP.",

explain: "No requirement is set regarding the field of application!"

}

]}

/>

### 2. How can you manage models on the Hub?

<Question

choices={[

{

text: "Through a GCP account.",

explain: "Incorrect!"

},

{

text: "Through peer-to-peer distribution.",

explain: "Incorrect!"

},

{

text: "Through git and git-lfs.",

explain: "Correct! Models on the Hub are simple Git repositories, leveraging <code>git-lfs</code> for large files.",

correct: true

}

]}

/>

### 3. What can you do using the Hugging Face Hub web interface?

<Question

choices={[

{

text: "Fork an existing repository.",

explain: "Forking a repository is not possible on the Hugging Face Hub."

},

{

text: "Create a new model repository.",

explain: "Correct! That's not all you can do, though.",

correct: true

},

{

text: "Manage and edit files.",

explain: "Correct! That's not the only right answer, though.",

correct: true

},

{

text: "Upload files.",

explain: "Right! But that's not all.",

correct: true

},

{

text: "See diffs across versions.",

explain: "Correct! That's not all you can do, though.",

correct: true

}

]}

/>

### 4. What is a model card?

<Question

choices={[

{

text: "A rough description of the model, therefore less important than the model and tokenizer files.",

explain: "It is indeed a description of the model, but it's an important piece: if it's incomplete or absent the model's utility is drastically reduced."

},

{

text: "A way to ensure reproducibility, reusability, and fairness.",

explain: "Correct! Sharing the right information in the model card will help users leverage your model and be aware of its limits and biases. ",

correct: true

},

{

text: "A Python file that can be run to retrieve information about the model.",

explain: "Model cards are simple Markdown files."

}

]}

/>

### 5. Which of these objects of the 🤗 Transformers library can be directly shared on the Hub with `push_to_hub()`?

{#if fw === 'pt'}

<Question

choices={[

{

text: "A tokenizer",

explain: "Correct! All tokenizers have the <code>push_to_hub</code> method, and using it will push all the tokenizer files (vocabulary, architecture of the tokenizer, etc.) to a given repo. That's not the only right answer, though!",

correct: true

},

{

text: "A model configuration",

explain: "Right! All model configurations have the <code>push_to_hub</code> method, and using it will push them to a given repo. What else can you share?",

correct: true

},

{

text: "A model",

explain: "Correct! All models have the <code>push_to_hub</code> method, and using it will push them and their configuration files to a given repo. That's not all you can share, though.",

correct: true

},

{

text: "A Trainer",

explain: "That's right — the <code>Trainer</code> also implements the <code>push_to_hub</code> method, and using it will upload the model, its configuration, the tokenizer, and a model card draft to a given repo. Try another answer!",

correct: true

}

]}

/>

{:else}

<Question

choices={[

{

text: "A tokenizer",

explain: "Correct! All tokenizers have the <code>push_to_hub</code> method, and using it will push all the tokenizer files (vocabulary, architecture of the tokenizer, etc.) to a given repo. That's not the only right answer, though!",

correct: true

},

{

text: "A model configuration",

explain: "Right! All model configurations have the <code>push_to_hub</code> method, and using it will push them to a given repo. What else can you share?",

correct: true

},

{

text: "A model",

explain: "Correct! All models have the <code>push_to_hub</code> method, and using it will push them and their configuration files to a given repo. That's not all you can share, though.",

correct: true

},

{

text: "All of the above with a dedicated callback",

explain: "That's right — the <code>PushToHubCallback</code> will regularly send all of those objects to a repo during training.",

correct: true

}

]}

/>

{/if}

### 6. What is the first step when using the `push_to_hub()` method or the CLI tools?

<Question

choices={[

{

text: "Log in on the website.",

explain: "This won't help you on your local machine."

},

{

text: "Run 'huggingface-cli login' in a terminal.",

explain: "Correct — this will download and cache your personal token.",

correct: true

},

{

text: "Run 'notebook_login()' in a notebook.",

explain: "Correct — this will display a widget to let you authenticate.",

correct: true

},

]}

/>

### 7. You're using a model and a tokenizer — how can you upload them to the Hub?

<Question

choices={[

{

text: "By calling the push_to_hub method directly on the model and the tokenizer.",

explain: "Correct!",

correct: true

},

{

text: "Within the Python runtime, by wrapping them in a <code>huggingface_hub</code> utility.",

explain: "Models and tokenizers already benefit from <code>huggingface_hub</code> utilities: no need for additional wrapping!"

},

{

text: "By saving them to disk and calling <code>transformers-cli upload-model</code>",

explain: "The command <code>upload-model</code> does not exist."

}

]}

/>

### 8. Which git operations can you do with the `Repository` class?

<Question

choices={[

{

text: "A commit.",

explain: "Correct, the <code>git_commit()</code> method is there for that.",

correct: true

},

{

text: "A pull",

explain: "That is the purpose of the <code>git_pull()</code> method.",

correct: true

},

{

text: "A push",

explain: "The method <code>git_push()</code> does this.",

correct: true

},

{

text: "A merge",

explain: "No, that operation will never be possible with this API."

}

]}

/>

| huggingface/course/blob/main/chapters/en/chapter4/6.mdx |

Panel on Spaces

[Panel](https://panel.holoviz.org/) is an open-source Python library that lets you easily build powerful tools, dashboards and complex applications entirely in Python. It has a batteries-included philosophy, putting the PyData ecosystem, powerful data tables and much more at your fingertips. High-level reactive APIs and lower-level callback based APIs ensure you can quickly build exploratory applications, but you aren’t limited if you build complex, multi-page apps with rich interactivity. Panel is a member of the [HoloViz](https://holoviz.org/) ecosystem, your gateway into a connected ecosystem of data exploration tools.

Visit [Panel documentation](https://panel.holoviz.org/) to learn more about making powerful applications.

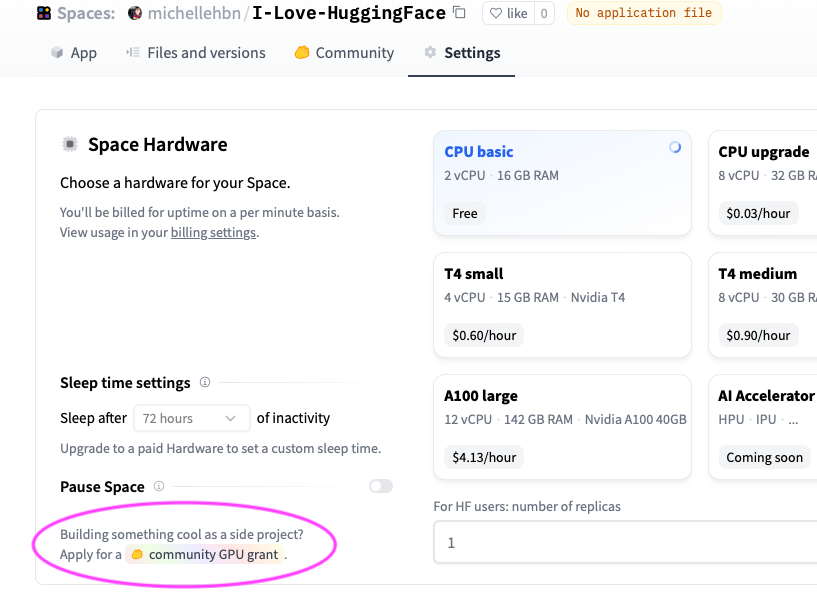

## 🚀 Deploy Panel on Spaces

You can deploy Panel on Spaces with just a few clicks:

<a href="https://huggingface.co/new-space?template=Panel-Org/panel-template"> <img src="https://huggingface.co/datasets/huggingface/badges/resolve/main/deploy-to-spaces-lg.svg"/> </a>

There are a few key parameters you need to define: the Owner (either your personal account or an organization), a Space name, and Visibility. In case you intend to execute computationally intensive deep learning models, consider upgrading to a GPU to boost performance.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/spaces-panel.png" style="width:70%">

Once you have created the Space, it will start out in “Building” status, which will change to “Running” once your Space is ready to go.

## ⚡️ What will you see?

When your Space is built and ready, you will see this image classification Panel app which will let you fetch a random image and run the OpenAI CLIP classifier model on it. Check out our [blog post](https://blog.holoviz.org/building_an_interactive_ml_dashboard_in_panel.html) for a walkthrough of this app.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/spaces-panel-demo.gif" style="width:70%">

## 🛠️ How to customize and make your own app?

The Space template will populate a few files to get your app started:

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/spaces-panel-files.png" style="width:70%">

Three files are important:

### 1. app.py

This file defines your Panel application code. You can start by modifying the existing application or replace it entirely to build your own application. To learn more about writing your own Panel app, refer to the [Panel documentation](https://panel.holoviz.org/).

### 2. Dockerfile

The Dockerfile contains a sequence of commands that Docker will execute to construct and launch an image as a container that your Panel app will run in. Typically, to serve a Panel app, we use the command `panel serve app.py`. In this specific file, we divide the command into a list of strings. Furthermore, we must define the address and port because Hugging Face will expect to serve your application on port 7860. Additionally, we need to specify the `allow-websocket-origin` flag to enable the connection to the server's websocket.

### 3. requirements.txt

This file defines the required packages for our Panel app. When using Space, dependencies listed in the requirements.txt file will be automatically installed. You have the freedom to modify this file by removing unnecessary packages or adding additional ones that are required for your application. Feel free to make the necessary changes to ensure your app has the appropriate packages installed.

## 🌐 Join Our Community

The Panel community is vibrant and supportive, with experienced developers and data scientists eager to help and share their knowledge. Join us and connect with us:

- [Discord](https://discord.gg/aRFhC3Dz9w)

- [Discourse](https://discourse.holoviz.org/)

- [Twitter](https://twitter.com/Panel_Org)

- [LinkedIn](https://www.linkedin.com/company/panel-org)

- [Github](https://github.com/holoviz/panel)

| huggingface/hub-docs/blob/main/docs/hub/spaces-sdks-docker-panel.md |

--

title: "Interactively explore your Huggingface dataset with one line of code"

thumbnail: /blog/assets/scalable-data-inspection/thumbnail.png

authors:

- user: sps44

guest: true

- user: druzsan

guest: true

- user: neindochoh

guest: true

- user: MarkusStoll

guest: true

---

# Interactively explore your Huggingface dataset with one line of code

The Hugging Face [*datasets* library](https://huggingface.co/docs/datasets/index) not only provides access to more than 70k publicly available datasets, but also offers very convenient data preparation pipelines for custom datasets.

[Renumics Spotlight](https://github.com/Renumics/spotlight) allows you to create **interactive visualizations** to **identify critical clusters** in your data. Because Spotlight understands the data semantics within Hugging Face datasets, you can **[get started with just one line of code](https://renumics.com/docs)**:

```python

import datasets

from renumics import spotlight

ds = datasets.load_dataset('speech_commands', 'v0.01', split='validation')

spotlight.show(ds)

```

<p align="center"><a href="https://github.com/Renumics/spotlight"><img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/scalable-data-inspection/speech_commands_vis_s.gif" width="100%"/></a></p>

Spotlight allows to **leverage model results** such as predictions and embeddings to gain a deeper understanding in data segments and model failure modes:

```python

ds_results = datasets.load_dataset('renumics/speech_commands-ast-finetuned-results', 'v0.01', split='validation')

ds = datasets.concatenate_datasets([ds, ds_results], axis=1)

spotlight.show(ds, dtype={'embedding': spotlight.Embedding}, layout=spotlight.layouts.debug_classification(embedding='embedding', inspect={'audio': spotlight.dtypes.audio_dtype}))

```

Data inspection is a very important task in almost all ML development stages, but it can also be very time consuming.

> “Manual inspection of data has probably the highest value-to-prestige ratio of any activity in machine learning.” — Greg Brockman

>

[Spotlight](https://renumics.com/docs) helps you to **make data inspection more scalable** along two dimensions: Setting up and maintaining custom data inspection workflows and finding relevant data samples and clusters to inspect. In the following sections we show some examples based on Hugging Face datasets.

## Spotlight 🤝 Hugging Face datasets

The *datasets* library has several features that makes it an ideal tool for working with ML datasets: It stores tabular data (e.g. metadata, labels) along with unstructured data (e.g. images, audio) in a common Arrows table. *Datasets* also describes important data semantics through features (e.g. images, audio) and additional task-specific metadata.

Spotlight directly works on top of the *datasets* library. This means that there is no need to copy or pre-process the dataset for data visualization and inspection. Spotlight loads the tabular data into memory to allow for efficient, client-side data analytics. Memory-intensive unstructured data samples (e.g. audio, images, video) are loaded lazily on demand. In most cases, data types and label mappings are inferred directly from the dataset. Here, we visualize the CIFAR-100 dataset with one line of code:

```python

ds = datasets.load_dataset('cifar100', split='test')

spotlight.show(ds)

```

In cases where the data types are ambiguous or not specified, the Spotlight API allows to manually assign them:

```python

label_mapping = dict(zip(ds.features['fine_label'].names, range(len(ds.features['fine_label'].names))))

spotlight.show(ds, dtype={'img': spotlight.Image, 'fine_label': spotlight.dtypes.CategoryDType(categories=label_mapping)})

```

## **Leveraging model results for data inspection**

Exploring raw unstructured datasets often yield little insights. Leveraging model results such as predictions or embeddings can help to uncover critical data samples and clusters. Spotlight has several visualization options (e.g. similarity map, confusion matrix) that specifically make use of model results.

We recommend storing your prediction results directly in a Hugging Face dataset. This not only allows you to take advantage of the batch processing capabilities of the datasets library, but also keeps label mappings.

We can use the [*transformers* library](https://huggingface.co/docs/transformers) to compute embeddings and predictions on the CIFAR-100 image classification problem. We install the libraries via pip:

```bash

pip install renumics-spotlight datasets transformers[torch]

```

Now we can compute the enrichment:

```python

import torch

import transformers

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model_name = "Ahmed9275/Vit-Cifar100"

processor = transformers.ViTImageProcessor.from_pretrained(model_name)

cls_model = transformers.ViTForImageClassification.from_pretrained(model_name).to(device)

fe_model = transformers.ViTModel.from_pretrained(model_name).to(device)

def infer(batch):

images = [image.convert("RGB") for image in batch]

inputs = processor(images=images, return_tensors="pt").to(device)

with torch.no_grad():

outputs = cls_model(**inputs)

probs = torch.nn.functional.softmax(outputs.logits, dim=-1).cpu().numpy()

embeddings = fe_model(**inputs).last_hidden_state[:, 0].cpu().numpy()

preds = probs.argmax(axis=-1)

return {"prediction": preds, "embedding": embeddings}

features = datasets.Features({**ds.features, "prediction": ds.features["fine_label"], "embedding": datasets.Sequence(feature=datasets.Value("float32"), length=768)})

ds_enriched = ds.map(infer, input_columns="img", batched=True, batch_size=2, features=features)

```

If you don’t want to perform the full inference run, you can alternatively download pre-computed model results for CIFAR-100 to follow this tutorial:

```python

ds_results = datasets.load_dataset('renumics/spotlight-cifar100-enrichment', split='test')

ds_enriched = datasets.concatenate_datasets([ds, ds_results], axis=1)

```

We can now use the results to interactively explore relevant data samples and clusters in Spotlight:

```python

layout = spotlight.layouts.debug_classification(label='fine_label', embedding='embedding', inspect={'img': spotlight.dtypes.image_dtype})

spotlight.show(ds_enriched, dtype={'embedding': spotlight.Embedding}, layout=layout)

```

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/scalable-data-inspection/cifar-100-model-debugging.png" alt="CIFAR-100 model debugging layout example.">

</figure>

## Customizing data inspection workflows

Visualization layouts can be interactively changed, saved and loaded in the GUI: You can select different widget types and configurations. The *Inspector* widget allows to represent multimodal data samples including text, image, audio, video and time series data.

You can also define layouts through the [Python API](https://renumics.com/api/spotlight/). This option is especially useful for building custom data inspection and curation workflows including EDA, model debugging and model monitoring tasks.

In combination with the data issues widget, the Python API offers a great way to integrate the results of existing scripts (e.g. data quality checks or model monitoring) into a scalable data inspection workflow.

## Using Spotlight on the Hugging Face hub

You can use Spotlight directly on your local NLP, audio, CV or multimodal dataset. If you would like to showcase your dataset or model results on the Hugging Face hub, you can use Hugging Face spaces to launch a Spotlight visualization for it.

We have already prepared [example spaces](https://huggingface.co/renumics) for many popular NLP, audio and CV datasets on the hub. You can simply duplicate one of these spaces and specify your dataset in the `HF_DATASET` variable.

You can optionally choose a dataset that contains model results and other configuration options such as splits, subsets or dataset revisions.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/scalable-data-inspection/space_duplication.png" alt="Creating a new dataset visualization with Spotlight by duplicating a Hugging Face space.">

</figure>

## What’s next?

With Spotlight you can create **interactive visualizations** and leverage data enrichments to **identify critical clusters** in your Hugging Face datasets. In this blog, we have seen both an audio ML and a computer vision example.

You can use Spotlight directly to explore and curate your NLP, audio, CV or multimodal dataset:

- Install Spotlight: *pip install renumics-spotlight*

- Check out the [documentation](https://renumics.com/docs) or open an issue on [Github](https://github.com/Renumics/spotlight)

- Join the [Spotlight community](https://discord.gg/VAQdFCU5YD) on Discord

- Follow us on [Twitter](https://twitter.com/renumics) and [LinkedIn](https://www.linkedin.com/company/renumics) | huggingface/blog/blob/main/scalable-data-inspection.md |

DeeBERT: Early Exiting for *BERT

This is the code base for the paper [DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference](https://www.aclweb.org/anthology/2020.acl-main.204/), modified from its [original code base](https://github.com/castorini/deebert).

The original code base also has information for downloading sample models that we have trained in advance.

## Usage

There are three scripts in the folder which can be run directly.

In each script, there are several things to modify before running:

* `PATH_TO_DATA`: path to the GLUE dataset.

* `--output_dir`: path for saving fine-tuned models. Default: `./saved_models`.

* `--plot_data_dir`: path for saving evaluation results. Default: `./results`. Results are printed to stdout and also saved to `npy` files in this directory to facilitate plotting figures and further analyses.

* `MODEL_TYPE`: bert or roberta

* `MODEL_SIZE`: base or large

* `DATASET`: SST-2, MRPC, RTE, QNLI, QQP, or MNLI

#### train_deebert.sh

This is for fine-tuning DeeBERT models.

#### eval_deebert.sh

This is for evaluating each exit layer for fine-tuned DeeBERT models.

#### entropy_eval.sh

This is for evaluating fine-tuned DeeBERT models, given a number of different early exit entropy thresholds.

## Citation

Please cite our paper if you find the resource useful:

```

@inproceedings{xin-etal-2020-deebert,

title = "{D}ee{BERT}: Dynamic Early Exiting for Accelerating {BERT} Inference",

author = "Xin, Ji and

Tang, Raphael and

Lee, Jaejun and

Yu, Yaoliang and

Lin, Jimmy",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.acl-main.204",

pages = "2246--2251",

}

```

| huggingface/transformers/blob/main/examples/research_projects/deebert/README.md |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Multitask Prompt Tuning

[Multitask Prompt Tuning](https://huggingface.co/papers/2303.02861) decomposes the soft prompts of each task into a single learned transferable prompt instead of a separate prompt for each task. The single learned prompt can be adapted for each task by multiplicative low rank updates.

The abstract from the paper is:

*Prompt tuning, in which a base pretrained model is adapted to each task via conditioning on learned prompt vectors, has emerged as a promising approach for efficiently adapting large language models to multiple downstream tasks. However, existing methods typically learn soft prompt vectors from scratch, and it has not been clear how to exploit the rich cross-task knowledge with prompt vectors in a multitask learning setting. We propose multitask prompt tuning (MPT), which first learns a single transferable prompt by distilling knowledge from multiple task-specific source prompts. We then learn multiplicative low rank updates to this shared prompt to efficiently adapt it to each downstream target task. Extensive experiments on 23 NLP datasets demonstrate that our proposed approach outperforms the state-of-the-art methods, including the full finetuning baseline in some cases, despite only tuning 0.035% as many task-specific parameters*.

## MultitaskPromptTuningConfig

[[autodoc]] tuners.multitask_prompt_tuning.config.MultitaskPromptTuningConfig

## MultitaskPromptEmbedding

[[autodoc]] tuners.multitask_prompt_tuning.model.MultitaskPromptEmbedding | huggingface/peft/blob/main/docs/source/package_reference/multitask_prompt_tuning.md |

(Tensorflow) EfficientNet Lite

**EfficientNet** is a convolutional neural network architecture and scaling method that uniformly scales all dimensions of depth/width/resolution using a *compound coefficient*. Unlike conventional practice that arbitrary scales these factors, the EfficientNet scaling method uniformly scales network width, depth, and resolution with a set of fixed scaling coefficients. For example, if we want to use \\( 2^N \\) times more computational resources, then we can simply increase the network depth by \\( \alpha ^ N \\), width by \\( \beta ^ N \\), and image size by \\( \gamma ^ N \\), where \\( \alpha, \beta, \gamma \\) are constant coefficients determined by a small grid search on the original small model. EfficientNet uses a compound coefficient \\( \phi \\) to uniformly scales network width, depth, and resolution in a principled way.

The compound scaling method is justified by the intuition that if the input image is bigger, then the network needs more layers to increase the receptive field and more channels to capture more fine-grained patterns on the bigger image.

The base EfficientNet-B0 network is based on the inverted bottleneck residual blocks of [MobileNetV2](https://paperswithcode.com/method/mobilenetv2).

EfficientNet-Lite makes EfficientNet more suitable for mobile devices by introducing [ReLU6](https://paperswithcode.com/method/relu6) activation functions and removing [squeeze-and-excitation blocks](https://paperswithcode.com/method/squeeze-and-excitation).

The weights from this model were ported from [Tensorflow/TPU](https://github.com/tensorflow/tpu).

## How do I use this model on an image?

To load a pretrained model:

```py

>>> import timm

>>> model = timm.create_model('tf_efficientnet_lite0', pretrained=True)

>>> model.eval()

```

To load and preprocess the image:

```py

>>> import urllib

>>> from PIL import Image

>>> from timm.data import resolve_data_config

>>> from timm.data.transforms_factory import create_transform

>>> config = resolve_data_config({}, model=model)

>>> transform = create_transform(**config)

>>> url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")

>>> urllib.request.urlretrieve(url, filename)

>>> img = Image.open(filename).convert('RGB')

>>> tensor = transform(img).unsqueeze(0) # transform and add batch dimension

```

To get the model predictions:

```py

>>> import torch

>>> with torch.no_grad():

... out = model(tensor)

>>> probabilities = torch.nn.functional.softmax(out[0], dim=0)

>>> print(probabilities.shape)

>>> # prints: torch.Size([1000])

```

To get the top-5 predictions class names:

```py

>>> # Get imagenet class mappings

>>> url, filename = ("https://raw.githubusercontent.com/pytorch/hub/master/imagenet_classes.txt", "imagenet_classes.txt")

>>> urllib.request.urlretrieve(url, filename)

>>> with open("imagenet_classes.txt", "r") as f:

... categories = [s.strip() for s in f.readlines()]

>>> # Print top categories per image

>>> top5_prob, top5_catid = torch.topk(probabilities, 5)

>>> for i in range(top5_prob.size(0)):

... print(categories[top5_catid[i]], top5_prob[i].item())

>>> # prints class names and probabilities like:

>>> # [('Samoyed', 0.6425196528434753), ('Pomeranian', 0.04062102362513542), ('keeshond', 0.03186424449086189), ('white wolf', 0.01739676296710968), ('Eskimo dog', 0.011717947199940681)]

```

Replace the model name with the variant you want to use, e.g. `tf_efficientnet_lite0`. You can find the IDs in the model summaries at the top of this page.

To extract image features with this model, follow the [timm feature extraction examples](../feature_extraction), just change the name of the model you want to use.

## How do I finetune this model?

You can finetune any of the pre-trained models just by changing the classifier (the last layer).

```py

>>> model = timm.create_model('tf_efficientnet_lite0', pretrained=True, num_classes=NUM_FINETUNE_CLASSES)

```

To finetune on your own dataset, you have to write a training loop or adapt [timm's training

script](https://github.com/rwightman/pytorch-image-models/blob/master/train.py) to use your dataset.

## How do I train this model?

You can follow the [timm recipe scripts](../scripts) for training a new model afresh.

## Citation

```BibTeX

@misc{tan2020efficientnet,

title={EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks},

author={Mingxing Tan and Quoc V. Le},

year={2020},

eprint={1905.11946},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

<!--

Type: model-index

Collections:

- Name: TF EfficientNet Lite

Paper:

Title: 'EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks'

URL: https://paperswithcode.com/paper/efficientnet-rethinking-model-scaling-for

Models:

- Name: tf_efficientnet_lite0

In Collection: TF EfficientNet Lite

Metadata:

FLOPs: 488052032

Parameters: 4650000

File Size: 18820223

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- RELU6

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: tf_efficientnet_lite0

Crop Pct: '0.875'

Image Size: '224'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1596

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_lite0-0aa007d2.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 74.83%

Top 5 Accuracy: 92.17%

- Name: tf_efficientnet_lite1

In Collection: TF EfficientNet Lite

Metadata:

FLOPs: 773639520

Parameters: 5420000

File Size: 21939331

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- RELU6

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: tf_efficientnet_lite1

Crop Pct: '0.882'

Image Size: '240'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1607

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_lite1-bde8b488.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 76.67%

Top 5 Accuracy: 93.24%

- Name: tf_efficientnet_lite2

In Collection: TF EfficientNet Lite

Metadata:

FLOPs: 1068494432

Parameters: 6090000

File Size: 24658687

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- RELU6

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: tf_efficientnet_lite2

Crop Pct: '0.89'

Image Size: '260'

Interpolation: bicubic

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1618

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_lite2-dcccb7df.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 77.48%

Top 5 Accuracy: 93.75%

- Name: tf_efficientnet_lite3

In Collection: TF EfficientNet Lite

Metadata:

FLOPs: 2011534304

Parameters: 8199999

File Size: 33161413

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- RELU6

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: tf_efficientnet_lite3

Crop Pct: '0.904'

Image Size: '300'

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1629

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_lite3-b733e338.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 79.83%

Top 5 Accuracy: 94.91%

- Name: tf_efficientnet_lite4

In Collection: TF EfficientNet Lite

Metadata:

FLOPs: 5164802912

Parameters: 13010000

File Size: 52558819

Architecture:

- 1x1 Convolution

- Average Pooling

- Batch Normalization

- Convolution

- Dense Connections

- Dropout

- Inverted Residual Block

- RELU6

Tasks:

- Image Classification

Training Data:

- ImageNet

ID: tf_efficientnet_lite4

Crop Pct: '0.92'

Image Size: '380'

Interpolation: bilinear

Code: https://github.com/rwightman/pytorch-image-models/blob/9a25fdf3ad0414b4d66da443fe60ae0aa14edc84/timm/models/efficientnet.py#L1640

Weights: https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_lite4-741542c3.pth

Results:

- Task: Image Classification

Dataset: ImageNet

Metrics:

Top 1 Accuracy: 81.54%

Top 5 Accuracy: 95.66%

-->

| huggingface/pytorch-image-models/blob/main/hfdocs/source/models/tf-efficientnet-lite.mdx |

User Studies

## Model Card Audiences and Use Cases

During our investigation into the landscape of model documentation tools (data cards etc), we noted how different stakeholders make use of existing infrastructure to create a kind of model card with information focused on their needed domain.

One such example are ‘business analysts’ or those whose focus is on B2B as well as an internal only audience.The static and more manual approach for this audience is using Confluence pages. (*if PMs write the page, we are detaching the model creators from its theoretical consumption; if ML engineers write the page, they may tend to stress only a certain type of information.* [^1]) or a proposed combination of HTML (Jinja) templates, Metaflow classes and external APi keys, in order to create model cards that include a perspective of the model information that is needed for their domain/use case.

We conducted a user study, with the aim of validating a literature informed model card structure and to understand sections/ areas of ranked importance for the different stakeholders perspectives. The study aimed to validate the following components:

* **Model Card Layout**

During our examination of the state of the art of model cards, which noted recurring sections from the top ~100 downloaded models on the hub that had model cards. From this analysis we catalogued the top recurring model card sections and recurring information, this coupled with the structure of the Bloom model card, lead us to the initial version of a standard model card structure.

As we began to structure our user studies, two variations of model cards - that made use of the [initial model card structure](./model-card-annotated) - were used as interactive demonstrations. The aim of these demo’s was to understand not only the different user perspectives on the visual elements of the model card’s but also the content presented to users. The {desired} outcome would enable us to further understand what makes a model card both easier to read, still providing some level of interactivity within the model cards, all while presenting the information in an easily understandable [approachable] manner.

* **Stakeholder Perspectives**

As different people, of varying technical backgrounds, could be collaborating on a model and subsequently the model card, we sought to validate the need for different stakeholders perspectives. Based on the ease of use of writing the different model card sections and the sections that one would read first

Participants ranked the different sections of model cards in the perspective of one reading a model card and then as an author of a model card. An ordering scheme - 1 being the highest weight and 10 being the lowest - was applied to the different sections that the user would usually read first in a model card and the sections of a model card that a model card author would find easiest to write.

## Summary of Responses to the User Studies Survey

Our user studies provided further clarity on the sections that different user profiles/stakeholders would find more challenging or easier to write.

The results illustrated below show that while the Bias, Risks and Limitations section ranks second for both model card writers and model card readers for *In what order do you write the model card and What section do you look at first*, respectively, it is also noted as the most challenging/longest section to write. This favoured/endorsed the need to further evaluate the Bias, Risks and Limitations sections in order to assist with writing this decisive/imperative section.

These templates were then used to generate model cards for the top 200 most downloaded Hugging Face (HF) models.

* We first began by pulling all Hugging Face model's on the hub and, in particular, subsections on Limitations and Bias ("Risks" subsections were largely not present).

* Based on inputs that were the most continuously used with a higher number of model downloads, grouped by model typed, the tool provides prompted text within the Bias, Risks and Limitations sections. We also prompt a default text if the model type is not specified.

Using this information, we returned back to our analysis of all model cards on the hub, coupled with suggestions from other researchers and peers at HF and additional research on the type of prompted information we could provide to users while they are creating model cards. These defaulted prompted text allowed us to satisfy the aims:

1) For those who have not created model cards before or who do not usually make a model card or any other type of model documentation for their model’s, the prompted text enables these users to easily create a model card. This in turn increased the number of model cards created.

2) Users who already write model cards, the prompted text invites them to add more to their model card, further developing the content/standard of model cards.

## User Study Details

We selected people from a variety of different backgrounds relevant to machine learning and model documentation. Below, we detail their demographics, the questions they were asked, and the corresponding insights from their responses. Full details on responses are available in [Appendix A](./model-card-appendix#appendix-a-user-study).

### Respondent Demographics

* Tech & Regulatory Affairs Counsel

* ML Engineer (x2)

* Developer Advocate

* Executive Assistant

* Monetization Lead

* Policy Manager/AI Researcher

* Research Intern

**What are the key pieces of information you want or need to know about a model when interacting with a machine learning model?**

**Insight:**

* Respondents prioritised information about the model task/domain (x3), training data/training procedure (x2), how to use the model (with code) (x2), bias and limitations, and the model licence

### Feedback on Specific Model Card Formats

#### Format 1:

**Current [distilgpt2 model card](https://huggingface.co/distilgpt2) on the Hub**

**Insights:**

* Respondents found this model card format to be concise, complete, and readable.

* There was no consensus about the collapsible sections (some liked them and wanted more, some disliked them).

* Some respondents said “Risks and Limitations” should go with “Out of Scope Uses”

#### Format 2:

**Nazneen Rajani's [Interactive Model Card space](https://huggingface.co/spaces/nazneen/interactive-model-cards)**

**Insights:**

* While a few respondents really liked this format, most found it overwhelming or as an overload of information. Several suggested this could be a nice tool to layer onto a base model card for more advanced audiences.

#### Format 3:

**Ezi Ozoani's [Semi-Interactive Model Card Space](https://huggingface.co/spaces/Ezi/ModelCardsAnalysis)**

**Insights:**

* Several respondents found this format overwhelming, but they generally found it less overwhelming than format 2.

* Several respondents disagreed with the current layout and gave specific feedback about which sections should be prioritised within each column.

### Section Rankings

*Ordered based on average ranking. Arrows are shown relative to the order of the associated section in the question on the survey.*

**Insights:**

* When writing model cards, respondents generally said they would write a model card in the same order in which the sections were listed in the survey question.

* When ranking the sections of the model card by ease/quickness of writing, consensus was that the sections on uses and limitations and risks were the most difficult.

* When reading model cards, respondents said they looked at the cards’ sections in an order that was close to – but not perfectly aligned with – the order in which the sections were listed in the survey question.

.png)

.png)

<Tip>

[Checkout the Appendix](./model-card-appendix)

</Tip>

Acknowledgements

================

We want to acknowledge and thank [Bibi Ofuya](https://www.figma.com/proto/qrPCjWfFz5HEpWqQ0PJSWW/Bibi's-Portfolio?page-id=0%3A1&node-id=1%3A28&viewport=243%2C48%2C0.2&scaling=min-zoom&starting-point-node-id=1%3A28) for her question creation and her guidance on user-focused ordering and presentation during the user studies.

[^1]: See https://towardsdatascience.com/dag-card-is-the-new-model-card-70754847a111

| huggingface/hub-docs/blob/main/docs/hub/model-cards-user-studies.md |

!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Evaluating Diffusion Models

<a target="_blank" href="https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/evaluation.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

Evaluation of generative models like [Stable Diffusion](https://huggingface.co/docs/diffusers/stable_diffusion) is subjective in nature. But as practitioners and researchers, we often have to make careful choices amongst many different possibilities. So, when working with different generative models (like GANs, Diffusion, etc.), how do we choose one over the other?

Qualitative evaluation of such models can be error-prone and might incorrectly influence a decision.

However, quantitative metrics don't necessarily correspond to image quality. So, usually, a combination

of both qualitative and quantitative evaluations provides a stronger signal when choosing one model

over the other.

In this document, we provide a non-exhaustive overview of qualitative and quantitative methods to evaluate Diffusion models. For quantitative methods, we specifically focus on how to implement them alongside `diffusers`.

The methods shown in this document can also be used to evaluate different [noise schedulers](https://huggingface.co/docs/diffusers/main/en/api/schedulers/overview) keeping the underlying generation model fixed.

## Scenarios

We cover Diffusion models with the following pipelines:

- Text-guided image generation (such as the [`StableDiffusionPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/stable_diffusion/text2img)).

- Text-guided image generation, additionally conditioned on an input image (such as the [`StableDiffusionImg2ImgPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/stable_diffusion/img2img) and [`StableDiffusionInstructPix2PixPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/pix2pix)).

- Class-conditioned image generation models (such as the [`DiTPipeline`](https://huggingface.co/docs/diffusers/main/en/api/pipelines/dit)).

## Qualitative Evaluation

Qualitative evaluation typically involves human assessment of generated images. Quality is measured across aspects such as compositionality, image-text alignment, and spatial relations. Common prompts provide a degree of uniformity for subjective metrics.

DrawBench and PartiPrompts are prompt datasets used for qualitative benchmarking. DrawBench and PartiPrompts were introduced by [Imagen](https://imagen.research.google/) and [Parti](https://parti.research.google/) respectively.

From the [official Parti website](https://parti.research.google/):

> PartiPrompts (P2) is a rich set of over 1600 prompts in English that we release as part of this work. P2 can be used to measure model capabilities across various categories and challenge aspects.

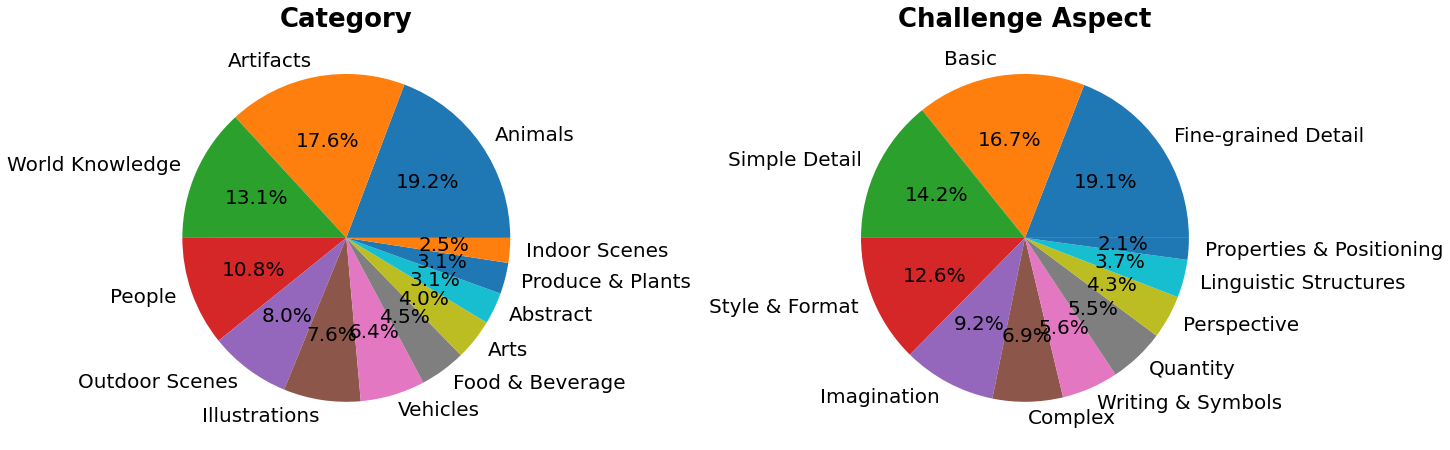

PartiPrompts has the following columns:

- Prompt

- Category of the prompt (such as “Abstract”, “World Knowledge”, etc.)

- Challenge reflecting the difficulty (such as “Basic”, “Complex”, “Writing & Symbols”, etc.)

These benchmarks allow for side-by-side human evaluation of different image generation models.

For this, the 🧨 Diffusers team has built **Open Parti Prompts**, which is a community-driven qualitative benchmark based on Parti Prompts to compare state-of-the-art open-source diffusion models:

- [Open Parti Prompts Game](https://huggingface.co/spaces/OpenGenAI/open-parti-prompts): For 10 parti prompts, 4 generated images are shown and the user selects the image that suits the prompt best.

- [Open Parti Prompts Leaderboard](https://huggingface.co/spaces/OpenGenAI/parti-prompts-leaderboard): The leaderboard comparing the currently best open-sourced diffusion models to each other.

To manually compare images, let’s see how we can use `diffusers` on a couple of PartiPrompts.

Below we show some prompts sampled across different challenges: Basic, Complex, Linguistic Structures, Imagination, and Writing & Symbols. Here we are using PartiPrompts as a [dataset](https://huggingface.co/datasets/nateraw/parti-prompts).

```python

from datasets import load_dataset

# prompts = load_dataset("nateraw/parti-prompts", split="train")

# prompts = prompts.shuffle()

# sample_prompts = [prompts[i]["Prompt"] for i in range(5)]

# Fixing these sample prompts in the interest of reproducibility.

sample_prompts = [

"a corgi",

"a hot air balloon with a yin-yang symbol, with the moon visible in the daytime sky",

"a car with no windows",

"a cube made of porcupine",

'The saying "BE EXCELLENT TO EACH OTHER" written on a red brick wall with a graffiti image of a green alien wearing a tuxedo. A yellow fire hydrant is on a sidewalk in the foreground.',

]

```

Now we can use these prompts to generate some images using Stable Diffusion ([v1-4 checkpoint](https://huggingface.co/CompVis/stable-diffusion-v1-4)):

```python

import torch

seed = 0

generator = torch.manual_seed(seed)

images = sd_pipeline(sample_prompts, num_images_per_prompt=1, generator=generator).images

```

We can also set `num_images_per_prompt` accordingly to compare different images for the same prompt. Running the same pipeline but with a different checkpoint ([v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5)), yields:

Once several images are generated from all the prompts using multiple models (under evaluation), these results are presented to human evaluators for scoring. For

more details on the DrawBench and PartiPrompts benchmarks, refer to their respective papers.

<Tip>

It is useful to look at some inference samples while a model is training to measure the

training progress. In our [training scripts](https://github.com/huggingface/diffusers/tree/main/examples/), we support this utility with additional support for

logging to TensorBoard and Weights & Biases.

</Tip>

## Quantitative Evaluation

In this section, we will walk you through how to evaluate three different diffusion pipelines using:

- CLIP score

- CLIP directional similarity

- FID

### Text-guided image generation

[CLIP score](https://arxiv.org/abs/2104.08718) measures the compatibility of image-caption pairs. Higher CLIP scores imply higher compatibility 🔼. The CLIP score is a quantitative measurement of the qualitative concept "compatibility". Image-caption pair compatibility can also be thought of as the semantic similarity between the image and the caption. CLIP score was found to have high correlation with human judgement.

Let's first load a [`StableDiffusionPipeline`]:

```python

from diffusers import StableDiffusionPipeline

import torch

model_ckpt = "CompVis/stable-diffusion-v1-4"

sd_pipeline = StableDiffusionPipeline.from_pretrained(model_ckpt, torch_dtype=torch.float16).to("cuda")

```

Generate some images with multiple prompts:

```python

prompts = [

"a photo of an astronaut riding a horse on mars",

"A high tech solarpunk utopia in the Amazon rainforest",

"A pikachu fine dining with a view to the Eiffel Tower",

"A mecha robot in a favela in expressionist style",

"an insect robot preparing a delicious meal",

"A small cabin on top of a snowy mountain in the style of Disney, artstation",

]

images = sd_pipeline(prompts, num_images_per_prompt=1, output_type="np").images

print(images.shape)

# (6, 512, 512, 3)

```

And then, we calculate the CLIP score.

```python

from torchmetrics.functional.multimodal import clip_score

from functools import partial

clip_score_fn = partial(clip_score, model_name_or_path="openai/clip-vit-base-patch16")

def calculate_clip_score(images, prompts):

images_int = (images * 255).astype("uint8")

clip_score = clip_score_fn(torch.from_numpy(images_int).permute(0, 3, 1, 2), prompts).detach()

return round(float(clip_score), 4)

sd_clip_score = calculate_clip_score(images, prompts)

print(f"CLIP score: {sd_clip_score}")

# CLIP score: 35.7038

```

In the above example, we generated one image per prompt. If we generated multiple images per prompt, we would have to take the average score from the generated images per prompt.

Now, if we wanted to compare two checkpoints compatible with the [`StableDiffusionPipeline`] we should pass a generator while calling the pipeline. First, we generate images with a

fixed seed with the [v1-4 Stable Diffusion checkpoint](https://huggingface.co/CompVis/stable-diffusion-v1-4):

```python

seed = 0

generator = torch.manual_seed(seed)

images = sd_pipeline(prompts, num_images_per_prompt=1, generator=generator, output_type="np").images

```

Then we load the [v1-5 checkpoint](https://huggingface.co/runwayml/stable-diffusion-v1-5) to generate images:

```python

model_ckpt_1_5 = "runwayml/stable-diffusion-v1-5"

sd_pipeline_1_5 = StableDiffusionPipeline.from_pretrained(model_ckpt_1_5, torch_dtype=weight_dtype).to(device)

images_1_5 = sd_pipeline_1_5(prompts, num_images_per_prompt=1, generator=generator, output_type="np").images

```

And finally, we compare their CLIP scores:

```python

sd_clip_score_1_4 = calculate_clip_score(images, prompts)

print(f"CLIP Score with v-1-4: {sd_clip_score_1_4}")

# CLIP Score with v-1-4: 34.9102

sd_clip_score_1_5 = calculate_clip_score(images_1_5, prompts)

print(f"CLIP Score with v-1-5: {sd_clip_score_1_5}")

# CLIP Score with v-1-5: 36.2137

```

It seems like the [v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5) checkpoint performs better than its predecessor. Note, however, that the number of prompts we used to compute the CLIP scores is quite low. For a more practical evaluation, this number should be way higher, and the prompts should be diverse.

<Tip warning={true}>

By construction, there are some limitations in this score. The captions in the training dataset

were crawled from the web and extracted from `alt` and similar tags associated an image on the internet.

They are not necessarily representative of what a human being would use to describe an image. Hence we

had to "engineer" some prompts here.

</Tip>

### Image-conditioned text-to-image generation

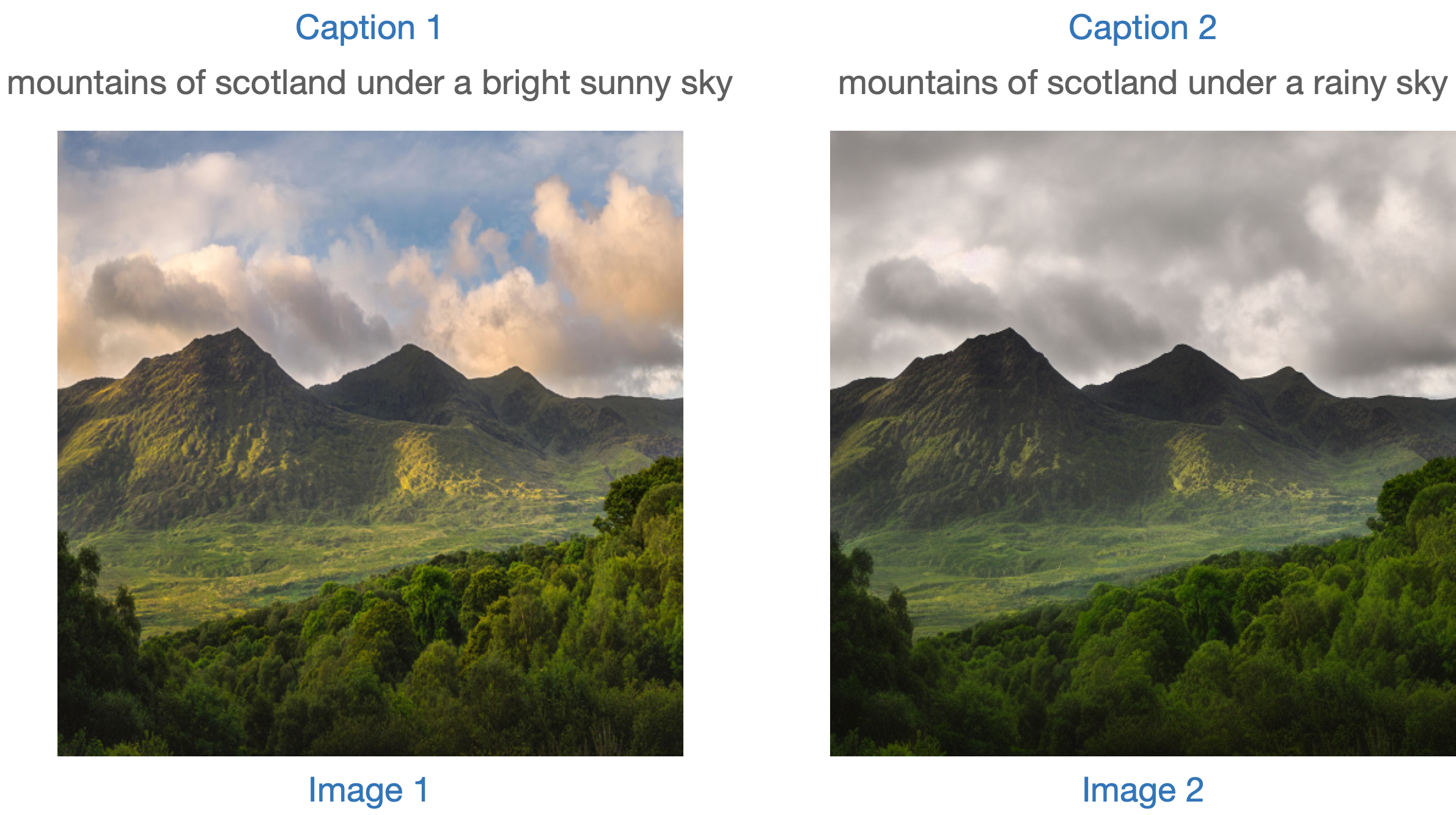

In this case, we condition the generation pipeline with an input image as well as a text prompt. Let's take the [`StableDiffusionInstructPix2PixPipeline`], as an example. It takes an edit instruction as an input prompt and an input image to be edited.

Here is one example:

One strategy to evaluate such a model is to measure the consistency of the change between the two images (in [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) space) with the change between the two image captions (as shown in [CLIP-Guided Domain Adaptation of Image Generators](https://arxiv.org/abs/2108.00946)). This is referred to as the "**CLIP directional similarity**".

- Caption 1 corresponds to the input image (image 1) that is to be edited.

- Caption 2 corresponds to the edited image (image 2). It should reflect the edit instruction.

Following is a pictorial overview:

We have prepared a mini dataset to implement this metric. Let's first load the dataset.

```python

from datasets import load_dataset

dataset = load_dataset("sayakpaul/instructpix2pix-demo", split="train")

dataset.features

```

```bash

{'input': Value(dtype='string', id=None),

'edit': Value(dtype='string', id=None),

'output': Value(dtype='string', id=None),

'image': Image(decode=True, id=None)}

```

Here we have:

- `input` is a caption corresponding to the `image`.

- `edit` denotes the edit instruction.

- `output` denotes the modified caption reflecting the `edit` instruction.

Let's take a look at a sample.

```python

idx = 0

print(f"Original caption: {dataset[idx]['input']}")

print(f"Edit instruction: {dataset[idx]['edit']}")

print(f"Modified caption: {dataset[idx]['output']}")

```

```bash