---
license: other
tags:
- stable-diffusion
- stable-diffusion-diffusers
- text-to-image
- endpoints-template
inference: false
extra_gated_prompt: >-
  One more step before getting this model.

  This model is open access and available to all, with a CreativeML OpenRAIL-M
  license further specifying rights and usage.

  The CreativeML OpenRAIL License specifies:

  1. You can't use the model to deliberately produce nor share illegal or
  harmful outputs or content

  2. CompVis claims no rights on the outputs you generate, you are free to use
  them and are accountable for their use which must not go against the
  provisions set in the license

  3. You may re-distribute the weights and use the model commercially and/or as
  a service. If you do, please be aware you have to include the same use
  restrictions as the ones in the license and share a copy of the CreativeML
  OpenRAIL-M to all your users (please read the license entirely and carefully)

  Please read the full license here:
  https://huggingface.co/spaces/CompVis/stable-diffusion-license

  By clicking on "Access repository" below, you accept that your *contact
  information* (email address and username) can be shared with the model authors
  as well.
extra_gated_fields:
  I have read the License and agree with its terms: checkbox
---

Fork of CompVis/stable-diffusion-v1-4

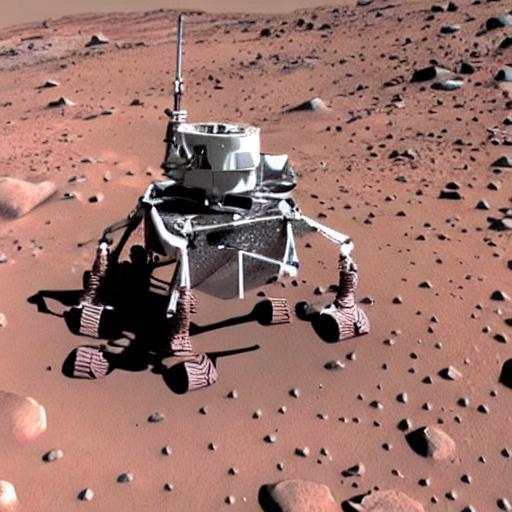

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. For more information about how Stable Diffusion works, see 🤗's Stable Diffusion with 🧨 Diffusers blog post.

For more information about the model, license, and limitations, check the original model card at CompVis/stable-diffusion-v1-4.

License (CreativeML OpenRAIL-M)

The full license can be found here: https://huggingface.co/spaces/CompVis/stable-diffusion-license

This repository implements a custom handler task for text-to-image for 🤗 Inference Endpoints. The code for the customized pipeline is in pipeline.py.

A notebook showing how to create handler.py is also included.
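To make the handler idea concrete, here is a minimal sketch of the shape such a handler can take. It assumes the usual custom-handler convention of an `EndpointHandler` class with `__init__(path)` and `__call__(data)`. The `generate` parameter and the `fake_generate` stub are hypothetical, added only so the sketch runs without model weights or a GPU; this is not the code shipped in this repository.

```python
import base64
from typing import Any, Callable, Dict


def fake_generate(prompt: str) -> bytes:
    """Hypothetical stand-in for the diffusion pipeline: returns placeholder bytes."""
    return f"image for: {prompt}".encode("utf-8")


class EndpointHandler:
    def __init__(self, path: str = "", generate: Callable[[str], bytes] = fake_generate):
        # `path` is where model weights would be loaded from; unused in this sketch.
        self.path = path
        # `generate` is an assumption of this sketch, not part of the real handler.
        self.generate = generate

    def __call__(self, data: Dict[str, Any]) -> Dict[str, str]:
        # Read the prompt from the "inputs" key of the request payload.
        prompt = data["inputs"]
        image_bytes = self.generate(prompt)
        # Return the image as a base64 string under the "image" key,
        # which is the key the client example in this README reads.
        return {"image": base64.b64encode(image_bytes).decode("utf-8")}


handler = EndpointHandler()
result = handler({"inputs": "the first animal on the mars"})
print(sorted(result.keys()))
```

In a real handler, the stub would be replaced by a call into the Stable Diffusion pipeline, with the generated image serialized to bytes before encoding.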

Expected request payload

{
  "inputs": "A prompt used for image generation"
}
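As a small illustration, the payload above can be built and serialized in Python as follows. The `build_payload` helper and its non-empty check are assumptions of this sketch; only the `inputs` key comes from the payload shown above.

```python
import json


def build_payload(prompt: str) -> dict:
    """Build the request body; only the "inputs" key is taken from the README."""
    # The non-empty check is an assumption, added to fail early on bad input.
    if not isinstance(prompt, str) or not prompt.strip():
        raise ValueError("prompt must be a non-empty string")
    return {"inputs": prompt}


# Serialize the payload to the JSON string that would be sent as the request body.
body = json.dumps(build_payload("A prompt used for image generation"))
print(body)
```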

Below is an example of how to send a request using Python and requests.

Run Request

import base64
from io import BytesIO

import requests as r
from PIL import Image

ENDPOINT_URL = ""  # URL of your Inference Endpoint
HF_TOKEN = ""  # your Hugging Face access token

# helper decoder: turn a base64 string into a PIL image
def decode_base64_image(image_string):
    base64_image = base64.b64decode(image_string)
    buffer = BytesIO(base64_image)
    return Image.open(buffer)

def predict(prompt: str = None):
    # the endpoint expects the prompt under the "inputs" key
    payload = {"inputs": prompt}
    response = r.post(
        ENDPOINT_URL, headers={"Authorization": f"Bearer {HF_TOKEN}"}, json=payload
    )
    resp = response.json()
    return decode_base64_image(resp["image"])

prediction = predict(
    prompt="the first animal on the mars"
)
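The request example reads `resp["image"]` directly, so it assumes every response is a JSON object with a base64 string under the `image` key. A slightly more defensive sketch is shown below. The `extract_image_bytes` helper, its error messages, and the simulated response are hypothetical and exist only so the example runs without a live endpoint.

```python
import base64
import binascii


def extract_image_bytes(resp: dict) -> bytes:
    """Return raw image bytes from a response shaped like {"image": "<base64>"}."""
    if "image" not in resp:
        # Hypothetical failure case, e.g. the endpoint returned an error object.
        raise KeyError(f"response has no 'image' key: {resp}")
    try:
        # validate=True rejects characters outside the base64 alphabet.
        return base64.b64decode(resp["image"], validate=True)
    except binascii.Error as exc:
        raise ValueError("'image' is not valid base64") from exc


# Simulated response, so the sketch runs without calling an endpoint.
fake_resp = {"image": base64.b64encode(b"raw image bytes").decode("utf-8")}
image_bytes = extract_image_bytes(fake_resp)
```

The returned bytes can then be wrapped in `BytesIO` and opened with `Image.open`, as `decode_base64_image` does.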

Expected output