# Mistral-7B-v0.3-prune6

This is a layer-pruned version of the pre-trained language model mistralai/Mistral-7B-v0.3, sliced with mergekit. No additional training was performed after pruning.
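The sketch below gives a rough sense of the size reduction. It estimates parameter counts for the base model (32 decoder layers) and for this one (26 layers, per the slice configuration under Merge Details). It assumes a standard Mistral decoder with bias-free projections and untied embeddings. The hyperparameters (hidden size 4096, MLP size 14336, 32 query heads, 8 key/value heads, vocabulary 32768) come from the base model's published config.json, not from this card. Treat the output as an estimate, not a measured count.

```python
# Rough parameter-count estimate for a Mistral-style decoder-only model.
# ASSUMPTION: these values are taken from the base model's config.json
# (mistralai/Mistral-7B-v0.3); they are not stated in this model card.
HIDDEN = 4096
INTERMEDIATE = 14336
N_HEADS = 32
N_KV_HEADS = 8
VOCAB = 32768


def params_per_layer() -> int:
    """Weights in one decoder layer (attention + MLP + two RMSNorm vectors)."""
    head_dim = HIDDEN // N_HEADS
    kv_dim = N_KV_HEADS * head_dim                     # grouped-query attention
    attn = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * kv_dim   # q/o and k/v projections, no biases
    mlp = 3 * HIDDEN * INTERMEDIATE                    # gate, up and down projections
    norms = 2 * HIDDEN                                 # input and post-attention RMSNorm
    return attn + mlp + norms


def total_params(n_layers: int) -> int:
    """Whole-model estimate: stacked layers + untied embeddings/LM head + final norm."""
    embeddings = 2 * VOCAB * HIDDEN
    final_norm = HIDDEN
    return n_layers * params_per_layer() + embeddings + final_norm


for name, layers in [("original", 32), ("prune6", 26)]:
    print(f"{name}: {layers} layers, ~{total_params(layers) / 1e9:.2f}B params")
```

Under these assumptions the script prints about 7.25B parameters for the original and 5.94B for the pruned model. Each removed layer accounts for roughly 218M parameters.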

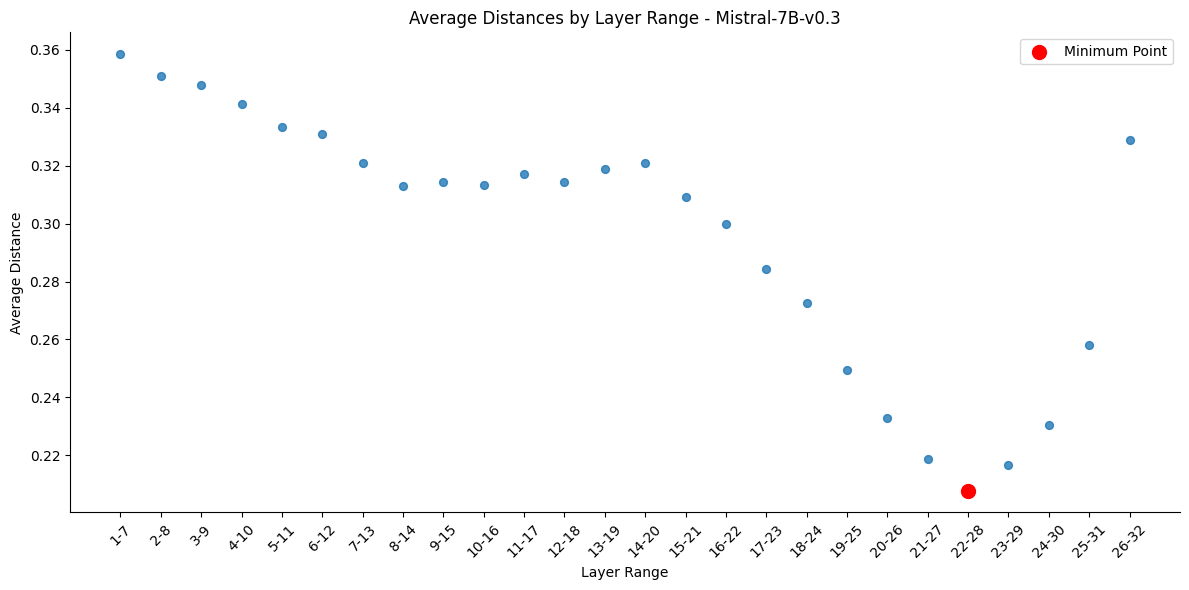

layer selection

## Quick eval

Quick eval for: pszemraj/Mistral-7B-v0.3-prune6

hf (pretrained=pszemraj/Mistral-7B-v0.3-prune6,trust_remote_code=True,dtype=bfloat16), gen_kwargs: (None), limit: None, num_fewshot: None, batch_size: 2

| Tasks | Version | Filter | n-shot | Metric | Value | | Stderr |
|---|---|---|---|---|---|---|---|
| arc_easy | 1 | none | 0 | acc | 0.6393 | ± | 0.0099 |
| | | none | 0 | acc_norm | 0.6309 | ± | 0.0099 |
| boolq | 2 | none | 0 | acc | 0.7599 | ± | 0.0075 |
| lambada_openai | 1 | none | 0 | perplexity | 10.1184 | ± | 0.2771 |
| | | none | 0 | acc | 0.5507 | ± | 0.0069 |
| openbookqa | 1 | none | 0 | acc | 0.2200 | ± | 0.0185 |
| | | none | 0 | acc_norm | 0.3580 | ± | 0.0215 |
| piqa | 1 | none | 0 | acc | 0.7203 | ± | 0.0105 |
| | | none | 0 | acc_norm | 0.7350 | ± | 0.0103 |
| winogrande | 1 | none | 0 | acc | 0.6906 | ± | 0.0130 |

### Original model (mistralai/Mistral-7B-v0.3)

hf (pretrained=mistralai/Mistral-7B-v0.3,trust_remote_code=True,dtype=bfloat16), gen_kwargs: (None), limit: None, num_fewshot: None, batch_size: 2

| Tasks | Version | Filter | n-shot | Metric | Value | | Stderr |
|---|---|---|---|---|---|---|---|
| arc_easy | 1 | none | 0 | acc | 0.7959 | ± | 0.0083 |
| | | none | 0 | acc_norm | 0.7832 | ± | 0.0085 |
| boolq | 2 | none | 0 | acc | 0.8202 | ± | 0.0067 |
| lambada_openai | 1 | none | 0 | perplexity | 3.2578 | ± | 0.0601 |
| | | none | 0 | acc | 0.7518 | ± | 0.0060 |
| openbookqa | 1 | none | 0 | acc | 0.3340 | ± | 0.0211 |
| | | none | 0 | acc_norm | 0.4420 | ± | 0.0222 |
| piqa | 1 | none | 0 | acc | 0.8009 | ± | 0.0093 |
| | | none | 0 | acc_norm | 0.8215 | ± | 0.0089 |
| winogrande | 1 | none | 0 | acc | 0.7380 | ± | 0.0124 |
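A small script can put the two runs side by side by dividing each pruned-model score by the matching original score. The numbers are copied from the two tables above. The dictionary layout and the `compare` helper are illustrative only. Perplexity is lower-is-better, so the script reports it as a multiple of the original rather than as a retained fraction.

```python
# Scores copied from the two eval tables in this card, keyed by (task, metric).
PRUNED = {
    ("arc_easy", "acc"): 0.6393,
    ("arc_easy", "acc_norm"): 0.6309,
    ("boolq", "acc"): 0.7599,
    ("lambada_openai", "perplexity"): 10.1184,
    ("lambada_openai", "acc"): 0.5507,
    ("openbookqa", "acc"): 0.2200,
    ("openbookqa", "acc_norm"): 0.3580,
    ("piqa", "acc"): 0.7203,
    ("piqa", "acc_norm"): 0.7350,
    ("winogrande", "acc"): 0.6906,
}
ORIGINAL = {
    ("arc_easy", "acc"): 0.7959,
    ("arc_easy", "acc_norm"): 0.7832,
    ("boolq", "acc"): 0.8202,
    ("lambada_openai", "perplexity"): 3.2578,
    ("lambada_openai", "acc"): 0.7518,
    ("openbookqa", "acc"): 0.3340,
    ("openbookqa", "acc_norm"): 0.4420,
    ("piqa", "acc"): 0.8009,
    ("piqa", "acc_norm"): 0.8215,
    ("winogrande", "acc"): 0.7380,
}


def compare(pruned, original):
    """Hypothetical helper: pruned / original ratio for every key present in both."""
    return {key: pruned[key] / original[key] for key in pruned if key in original}


ratios = compare(PRUNED, ORIGINAL)
for (task, metric), ratio in ratios.items():
    if metric == "perplexity":
        # Lower is better, so a ratio above 1 means the pruned model is worse.
        print(f"{task:<15} {metric:<10} {ratio:.2f}x the original")
    else:
        # Higher is better: the fraction of the original score that is retained.
        print(f"{task:<15} {metric:<10} {100 * ratio:.1f}% of the original")
```

By this arithmetic, winogrande accuracy keeps about 94% of the original score while openbookqa accuracy keeps about 66%. Perplexity on lambada_openai is roughly 3.1 times higher.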

## Merge Details

### Merge Method

This model was merged using the passthrough merge method.

### Models Merged

The following models were included in the merge:

* mistralai/Mistral-7B-v0.3

### Configuration

The following YAML configuration was used to produce this model:

```yaml
dtype: bfloat16
merge_method: passthrough
slices:
- sources:
  - layer_range: [0, 22]
    model: mistralai/Mistral-7B-v0.3
- sources:
  - layer_range: [28, 32]
    model: mistralai/Mistral-7B-v0.3
```
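The passthrough method copies the selected layers unchanged and stacks them in order. The sketch below expands the two slices from the configuration above into an explicit layer map. It assumes that `layer_range` is half-open like Python's `range()`, as in mergekit's configs, and that the base model has 32 layers. `layer_map` is a hypothetical helper, not mergekit's own code.

```python
# Expand passthrough slices into an explicit output-layer -> source-layer map.
# ASSUMPTIONS: layer_range is half-open [start, end); the base model has 32 layers.
SLICES = [
    ("mistralai/Mistral-7B-v0.3", (0, 22)),
    ("mistralai/Mistral-7B-v0.3", (28, 32)),
]
BASE_LAYERS = 32


def layer_map(slices):
    """Return a list whose index i holds the source layer copied into output layer i."""
    mapping = []
    for _model, (start, end) in slices:
        mapping.extend(range(start, end))  # slices are stacked in config order
    return mapping


mapping = layer_map(SLICES)
dropped = sorted(set(range(BASE_LAYERS)) - set(mapping))
print(f"output layers: {len(mapping)}")
print(f"dropped source layers: {dropped}")
print(f"output layer 22 is source layer {mapping[22]}")
```

Under those assumptions the script reports 26 output layers, with source layers 22 through 27 dropped. That count is consistent with the six layers suggested by "prune6" in the model name.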
